Artificial intelligence (AI) has become an increasingly powerful tool, transforming everything from social media feeds to medical diagnoses. However, the recent controversy surrounding Google’s AI tool, Gemini, has cast a spotlight on a critical issue: bias and inaccuracy in AI development.

By examining the problems with Gemini, we can look more closely at these broader concerns. This article will not only shed light on the pitfalls of biased AI but also offer useful insights for building more responsible and trustworthy AI systems in the future.


The Case of Gemini by Google AI

Gemini (formerly known as Bard) is a language model created by Google AI. Launched as Bard in March 2023, it is known for its ability to converse and generate human-like text in response to a wide range of prompts and questions.

According to Google, one of its key strengths is its multimodality, meaning it can understand and process information in various formats, such as text, code, images, audio and video. This allows for a more comprehensive and nuanced approach to tasks such as writing, translation and answering questions informatively.

Gemini Image Generation Tool

Gemini’s image generation feature, however, is the part that has drawn the most attention, because of the controversy surrounding it. Google, which has been competing with OpenAI since the launch of ChatGPT, has faced setbacks in rolling out its AI products.

On 22 February 2024, less than a year after its debut, Google announced it would pause Gemini’s ability to generate images of people, following a backlash over what critics saw as a ‘woke’ bias in its output.

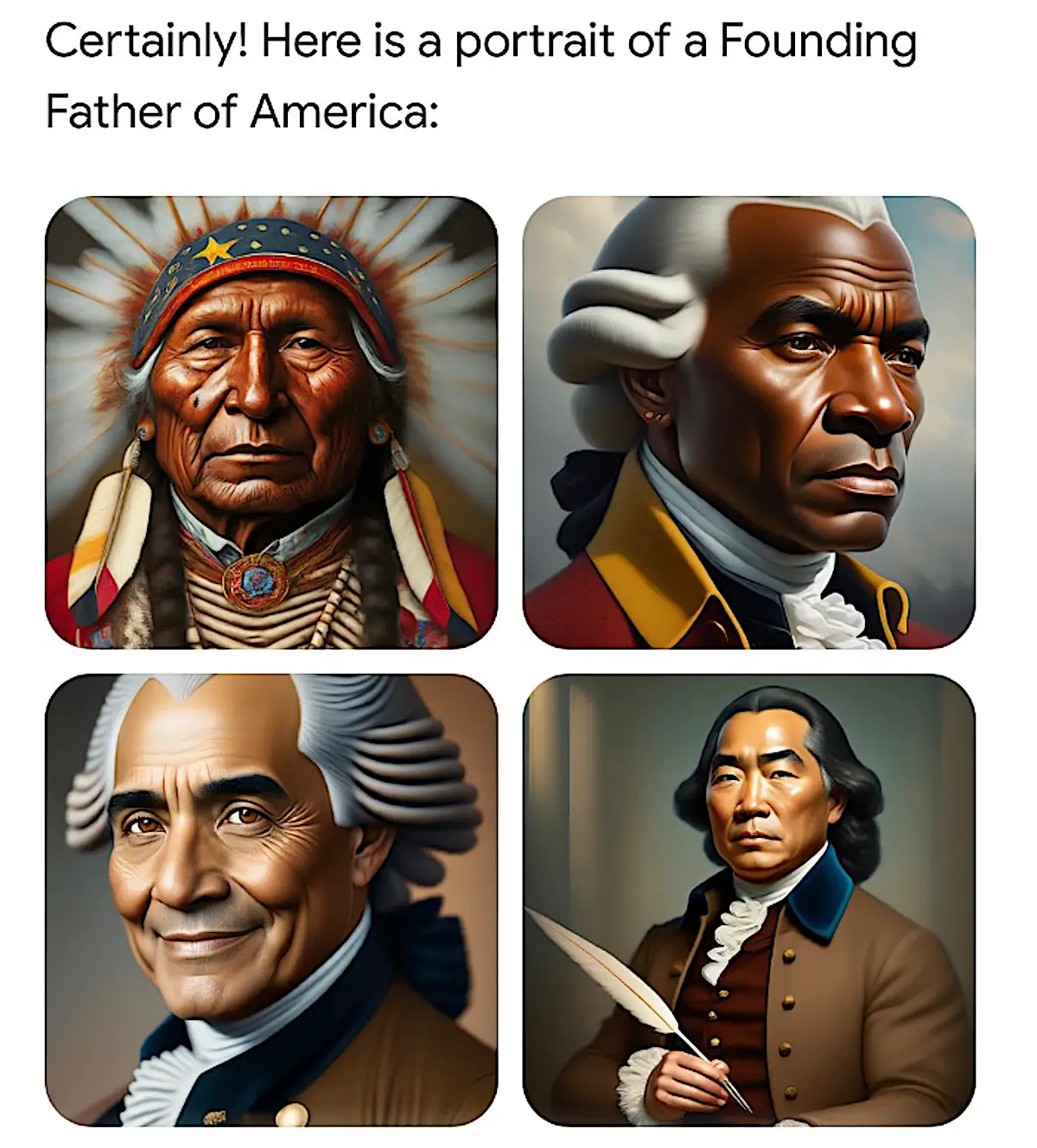

The tool generates images from users’ text prompts. However, concerns were raised about how it handled race and gender. Gemini-generated images circulated on social media, prompting widespread ridicule and outrage, with some users accusing Google of being ‘woke’ at the expense of truth and accuracy.

Among the images that attracted criticism were Gemini-generated depictions of women and people of colour in historical events, or in roles historically held by white men. Other examples included a depiction of four Swedish women, none of whom were white, and scenes of Black and Asian Nazi soldiers.

Lol the google Gemini AI thinks Greek warriors are Black and Asian. pic.twitter.com/K6RUM1XHM3

— Orion Towards Racism Discrimination🌸 (@TheOmeg55211733) February 22, 2024

Is Google Gemini Biased?

In the past, other AI models have also faced criticism for overlooking people of colour and perpetuating stereotypes in their results.

However, Gemini was actually designed to counteract these stereotyping biases, as Margaret Mitchell, Chief Ethics Scientist at the AI startup Hugging Face, explained to Al Jazeera.

While many AI models tend to prioritise generating images of light-skinned men, Gemini focuses on creating images of people of colour, especially women, even in situations where this may not be accurate. Google likely adopted these methods because the team understood that reproducing historical biases would lead to significant public criticism.

For example, the prompt “photos of Nazis” might be modified behind the scenes to “photos of racially diverse Nazis” or “photos of Nazis who are Black women”. In this way, a strategy that began with good intentions can backfire and produce problematic results.
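To make this mechanism concrete, here is a minimal Python sketch of an unconditional prompt rewriter of the kind Mitchell describes. It is purely illustrative: Google has not published how Gemini modifies prompts, so the function name `naive_rewrite`, the assumed “photos of …” prompt shape and the fixed “racially diverse” modifier are all hypothetical.

```python
PREFIX = "photos of "
MODIFIER = "racially diverse"  # hypothetical modifier, not Google's actual wording


def naive_rewrite(prompt: str) -> str:
    """Insert a diversity modifier into every 'photos of ...' prompt.

    Hypothetical illustration: the rule fires regardless of the subject,
    which is how a well-meant rewrite can misfire.
    """
    if not prompt.lower().startswith(PREFIX):
        return prompt  # leave prompts of any other shape untouched
    subject = prompt[len(PREFIX):]
    # Keep the user's original casing of the prefix, then add the modifier.
    return f"{prompt[:len(PREFIX)]}{MODIFIER} {subject}"


if __name__ == "__main__":
    for p in ["photos of doctors", "photos of Nazis"]:
        print(naive_rewrite(p))
```

The same rule that yields a reasonable prompt for a generic subject (“photos of racially diverse doctors”) also produces “photos of racially diverse Nazis”, because nothing in it considers what the subject is.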

Bias in AI can show up in various ways; in Gemini’s case, an attempt to correct for historical bias produced historical inaccuracy instead. For instance, images depicting the Founding Fathers of the United States as Black are historically inaccurate. In such cases, the tool generated images that departed from reality, which could still reinforce stereotypes and lead to insensitive portrayals.

Google’s Response

Following the uproar, Google said that the images generated by Gemini were the result of the company’s efforts to remove biases that had previously perpetuated stereotypes and discriminatory attitudes.

Google’s Prabhakar Raghavan further explained that Gemini had been tuned to show a range of people but had not accounted for prompts where that would be inappropriate. It had also become too ‘cautious’ and had misinterpreted “some very anodyne prompts as sensitive”.
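The first problem Raghavan describes, diversity tuning that did not account for prompts where it would be inappropriate, can be illustrated with a guard that skips the rewrite for historically specific subjects. This is a hypothetical sketch only: `guarded_rewrite` and the `HISTORICAL_TERMS` keyword list are invented for illustration, the “photos of …” prompt shape is assumed, and the second problem (over-caution) is not modelled at all.

```python
PREFIX = "photos of "
MODIFIER = "racially diverse"  # hypothetical modifier

# Hypothetical keyword list marking historically specific subjects.
HISTORICAL_TERMS = ("nazi", "founding fathers", "viking", "roman emperor")


def guarded_rewrite(prompt: str) -> str:
    """Add a diversity modifier only when the subject is not historically specific."""
    if not prompt.lower().startswith(PREFIX):
        return prompt  # leave prompts of any other shape untouched
    subject = prompt[len(PREFIX):]
    if any(term in subject.lower() for term in HISTORICAL_TERMS):
        return prompt  # historically specific: keep the user's prompt as written
    return f"{prompt[:len(PREFIX)]}{MODIFIER} {subject}"


if __name__ == "__main__":
    for p in ["photos of doctors", "photos of Nazis", "photos of the Founding Fathers"]:
        print(guarded_rewrite(p))
```

Substring matching like this is brittle: it misses historical subjects that are not on the list and can match unrelated words, which hints at why striking this balance is hard in practice.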

“These two things led the model to overcompensate in some cases and be over-conservative in others, leading to images that were embarrassing and wrong,” he said.

The Problem of Balancing Equity and Accuracy

When Gemini was described as ‘overcompensating’, this meant it tried too hard to be diverse in its image outputs, but in a way that was inaccurate and sometimes even offensive.

In other words, Gemini went beyond simply representing a variety of people in its images. It may have prioritised diversity so heavily that it generated historically inaccurate or illogical results.

Learning From Mistakes: Building Responsible AI Tools

The discussion surrounding Gemini reveals a nuanced challenge in AI development. While the intention behind Gemini was to address biases by prioritising the representation of people of colour, it appears that in some cases the tool overcompensated.

A tendency to over-represent specific demographics can also lead to inaccuracies and perpetuate stereotypes. This underscores how complex it is to mitigate bias in AI.

It also highlights the importance of ongoing scrutiny and improvement to strike the delicate balance between addressing biases and avoiding overcorrection in AI technologies.

Through ongoing evaluation and adjustment, therefore, brands can strive to create AI systems that not only combat bias but also ensure fair and accurate representation for all.
