List three things you’ve done this year that pertain to search engine optimization (SEO).

Do these tactics revolve around keyword research, meta descriptions, and backlinks?

If so, you’re not alone. When it comes to SEO, these techniques are usually the first ones marketers add to their arsenal.

While these strategies do improve your site’s visibility in organic search, they’re not the only ones you should be using. There’s another set of tactics that falls under the SEO umbrella.

Technical SEO refers to the behind-the-scenes elements that power your organic growth engine, such as site architecture, mobile optimization, and page speed. These aspects of SEO might not be the sexiest, but they’re incredibly important.

The first step in improving your technical SEO is knowing where you stand by performing a site audit. The second step is to create a plan to address the areas where you fall short. We’ll cover these steps in depth below.

Pro tip: Create a website designed to convert using HubSpot’s free CMS tools.

What is technical SEO?

Technical SEO refers to anything you do that makes your site easier for search engines to crawl and index. Technical SEO, content strategy, and link-building strategies all work in tandem to help your pages rank highly in search.

Technical SEO vs. On-Page SEO vs. Off-Page SEO

Many people break down search engine optimization (SEO) into three different buckets: on-page SEO, off-page SEO, and technical SEO. Let’s quickly cover what each means.

On-Page SEO

On-page SEO refers to the content that tells search engines (and readers!) what your page is about, including image alt text, keyword usage, meta descriptions, H1 tags, URL naming, and internal linking. You have the most control over on-page SEO because, well, everything is on your site.

Off-Page SEO

Off-page SEO tells search engines how popular and useful your page is through votes of confidence, most notably backlinks, or links from other sites to your own. Backlink quantity and quality boost a page’s PageRank. All things being equal, a page with 100 relevant links from credible sites will outrank a page with 50 relevant links from credible sites (or 100 irrelevant links from credible sites).

Technical SEO

Technical SEO is within your control as well, but it’s a bit trickier to master since it’s less intuitive.

Why is technical SEO important?

You may be tempted to ignore this component of SEO completely; however, it plays an important role in your organic traffic. Your content might be the most thorough, useful, and well-written, but unless a search engine can crawl it, very few people will ever see it.

It’s like a tree that falls in the forest when no one is around to hear it … does it make a sound? Without a strong technical SEO foundation, your content will make no sound to search engines.

Let’s discuss how you can make your content resound through the internet.

Understanding Technical SEO

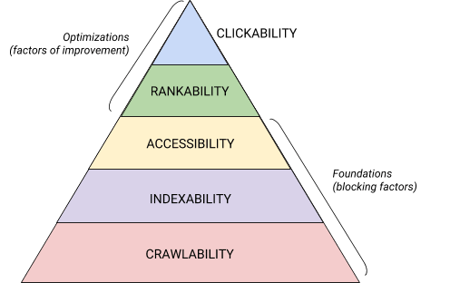

Technical SEO is a beast that is best broken down into digestible pieces. If you’re like me, you like to tackle big things in chunks and with checklists. Believe it or not, everything we’ve covered so far can be placed into one of five categories, each of which deserves its own list of actionable items.

These five categories and their place in the technical SEO hierarchy are best illustrated by this graphic, which is reminiscent of Maslow’s Hierarchy of Needs but remixed for search engine optimization. (Note that we’ll use the commonly used term “Rendering” in place of “Accessibility.”)

Technical SEO Audit Fundamentals

Before you begin your technical SEO audit, there are a few fundamentals that you need to put in place.

Let’s cover these technical SEO fundamentals before we move on to the rest of your site audit.

Audit Your Preferred Domain

Your domain is the URL that people type to arrive at your site, like hubspot.com. Your website domain impacts whether people can find you through search and provides a consistent way to identify your site.

When you select a preferred domain, you’re telling search engines whether you prefer the www or non-www version of your site to be displayed in the search results. For example, you might select www.yourwebsite.com over yourwebsite.com. This tells search engines to prioritize the www version of your site and redirects all users to that URL. Otherwise, search engines will treat these two versions as separate sites, resulting in dispersed SEO value.

Previously, Google asked you to identify the version of your URL that you prefer. Now, Google will identify and select a version to show searchers for you. However, if you prefer to set the preferred version of your domain yourself, you can do so through canonical tags (which we’ll cover shortly). Either way, once you set your preferred domain, make sure that all variants (www, non-www, http, and index.html) permanently redirect to that version.
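To make the redirect rule concrete, here is a minimal Python sketch that maps each variant to a single preferred URL. It assumes the placeholder domain yourwebsite.com from the example above, assumes the www and https variant is the preferred one, and uses a hypothetical helper name, `preferred_url`. Your server or CMS would issue the actual 301 redirect; this only computes the target.

```python
from urllib.parse import urlsplit, urlunsplit

BARE_HOST = "yourwebsite.com"        # placeholder domain from the text
PREFERRED_HOST = "www." + BARE_HOST  # assumption: the www variant is preferred


def preferred_url(url: str) -> str:
    """Return the URL that a variant should permanently (301) redirect to."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host not in (BARE_HOST, PREFERRED_HOST):
        return url  # a different site: leave it alone
    path = parts.path or "/"
    if path.endswith("/index.html"):
        path = path[: -len("index.html")]  # /index.html -> /
    # Assumption: https is the preferred scheme; fragments are dropped.
    return urlunsplit(("https", PREFERRED_HOST, path, parts.query, ""))


for variant in ("http://yourwebsite.com", "http://www.yourwebsite.com/index.html"):
    print(variant, "->", preferred_url(variant))
```

Any URL whose result differs from its input is a variant that needs a permanent redirect.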

Implement SSL

You may have heard this term before, and that’s because it’s pretty important. SSL, or Secure Sockets Layer, creates a layer of protection between the web server (the software responsible for fulfilling an online request) and a browser, thereby making your site secure. When a user sends information to your website, like payment or contact details, that information is less likely to be intercepted because SSL protects it.

An SSL certificate is denoted by a domain that starts with “https://” as opposed to “http://” and a lock symbol in the URL bar.

Search engines prioritize secure sites. In fact, Google announced as early as 2014 that SSL would be considered a ranking factor. Because of this, be sure to set the SSL variant of your homepage as your preferred domain.

After you set up SSL, you’ll need to migrate any non-SSL pages from http to https. It’s a tall order, but worth the effort in the name of improved ranking. Here are the steps you need to take:

- Redirect all http://yourwebsite.com pages to https://yourwebsite.com.

- Update all canonical and hreflang tags accordingly.

- Update the URLs in your sitemap (located at yourwebsite.com/sitemap.xml) and your robots.txt (located at yourwebsite.com/robots.txt).

- Set up a new instance of Google Search Console and Bing Webmaster Tools for your https site and track it to make sure 100% of the traffic migrates over.

Optimize Page Speed

Do you know how long a website visitor will wait for your site to load? Six seconds … and that’s being generous. Some data shows that the bounce rate increases by 90% when page load time goes from one to five seconds. You don’t have one second to waste, so improving your site load time should be a priority.

Site speed isn’t just important for user experience and conversion; it’s also a ranking factor.

Use these tips to improve your average page load time:

- Compress all of your files. Compression reduces the size of your images, as well as CSS, HTML, and JavaScript files, so they take up less space and load faster.

- Audit redirects regularly. Every 301 redirect takes extra time to process. Multiply that over several pages or layers of redirects, and you’ll seriously impact your site speed.

- Trim down your code. Messy code can negatively impact your site speed. Messy code means code that’s lazy. It’s like writing: maybe in the first draft, you make your point in six sentences. In the second draft, you make it in three. The more efficient code is, the more quickly the page will load (in general). Once you clean things up, you’ll minify and compress your code.

- Consider a content distribution network (CDN). CDNs are distributed web servers that store copies of your website in various geographical locations and deliver your site based on the searcher’s location. Since the information between servers has a shorter distance to travel, your site loads faster for the requesting party.

- Try not to go plugin happy. Outdated plugins often have security vulnerabilities that make your website susceptible to malicious hackers who can harm your website’s rankings. Make sure you’re always using the latest versions of plugins and minimize your use to the most essential. In the same vein, consider using custom-made themes, as pre-made website themes often come with a lot of unnecessary code.

- Take advantage of cache plugins. Cache plugins store a static version of your site to send to returning users, thereby decreasing the time to load the site during repeat visits.

- Use asynchronous (async) loading. Scripts are instructions that browsers need to read before they can process the HTML, or body, of your webpage, i.e. the things visitors want to see on your site. Typically, scripts are placed in the <head> of a website (think: your Google Tag Manager script), where they are prioritized over the content on the rest of the page. Using async code means the browser can process the HTML and script simultaneously, thereby decreasing the delay and improving page load time.

Here’s how an async script looks: <script async src="script.js"></script>

If you want to see where your website falls short in the speed department, you can use this resource from Google.
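To get a feel for what the compression tip buys you, here is a small Python sketch that gzips a chunk of text and compares sizes. The sample stylesheet is made up for illustration, and `gzip_savings` is a hypothetical helper name; in practice compression is usually switched on in your web server or CDN rather than done by hand.

```python
import gzip


def gzip_savings(text: str) -> tuple[int, int]:
    """Return (raw_bytes, gzipped_bytes) for a text asset such as CSS or HTML."""
    raw = text.encode("utf-8")
    return len(raw), len(gzip.compress(raw))


# Made-up, repetitive stylesheet standing in for a real CSS file.
sample_css = "body { margin: 0; padding: 0; font-family: sans-serif; }\n" * 200
raw_size, packed_size = gzip_savings(sample_css)
print(f"raw: {raw_size} bytes, gzipped: {packed_size} bytes")
```

Text assets like CSS, HTML, and JavaScript tend to be repetitive, which is why they usually compress well.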

Once you have your technical SEO fundamentals in place, you’re ready to move on to the next stage: crawlability.

Crawlability Checklist

Crawlability is the foundation of your technical SEO strategy. Search bots will crawl your pages to gather information about your site.

If these bots are somehow blocked from crawling, they can’t index or rank your pages. The first step to implementing technical SEO is to ensure that all of your important pages are accessible and easy to navigate.

Below we’ll cover some items to add to your checklist as well as some website elements to audit to ensure that your pages are prime for crawling.

- Create an XML sitemap.

- Maximize your crawl budget.

- Optimize your site architecture.

- Set a URL structure.

- Utilize robots.txt.

- Add breadcrumb menus.

- Use pagination.

- Check your SEO log files.

1. Create an XML sitemap.

Your site structure belongs in something called an XML sitemap, which helps search bots understand and crawl your web pages. You can think of it as a map for your website. You’ll submit your sitemap to Google Search Console and Bing Webmaster Tools once it’s complete. Remember to keep your sitemap up to date as you add and remove web pages.
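As a rough illustration of what a sitemap file contains, the Python sketch below builds a minimal one from a list of URLs using the sitemaps.org namespace. The URLs are placeholders and `build_sitemap` is a hypothetical helper; most CMSs and SEO plugins generate this file for you.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal XML sitemap listing each URL in a <loc> element."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs for illustration only.
print(build_sitemap([
    "https://www.yourwebsite.com/",
    "https://www.yourwebsite.com/blog",
]))
```

Regenerating the file whenever pages are added or removed is one way to keep it up to date.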

2. Maximize your crawl budget.

Your crawl budget refers to the pages and resources on your site that search bots will crawl.

Because crawl budget isn’t infinite, make sure you’re prioritizing your most important pages for crawling.

Here are a few tips to ensure you’re maximizing your crawl budget:

- Remove or canonicalize duplicate pages.

- Fix or redirect any broken links.

- Make sure your CSS and JavaScript files are crawlable.

- Check your crawl stats regularly and watch for sudden dips or increases.

- Make sure any bot or page you’ve disallowed from crawling is meant to be blocked.

- Keep your sitemap updated and submit it to the appropriate webmaster tools.

- Prune your site of unnecessary or outdated content.

- Watch out for dynamically generated URLs, which can make the number of pages on your site skyrocket.
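Dynamically generated URLs often differ only in tracking or session parameters. The Python sketch below shows one way to collapse such variants into a single form so you can spot duplicates in a crawl export. The parameter names in `TRACKING_PARAMS` are assumptions; substitute whatever your own site appends.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that do not change page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def canonical_form(url: str) -> str:
    """Drop tracking parameters and sort the rest so duplicates compare equal."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key not in TRACKING_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


a = canonical_form("https://www.yourwebsite.com/products?utm_source=x&color=red")
b = canonical_form("https://www.yourwebsite.com/products?color=red&sessionid=1")
print(a == b)  # both collapse to the same URL
```

URLs that collapse to the same form are candidates for a canonical tag or a redirect.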

3. Optimize your web site structure.

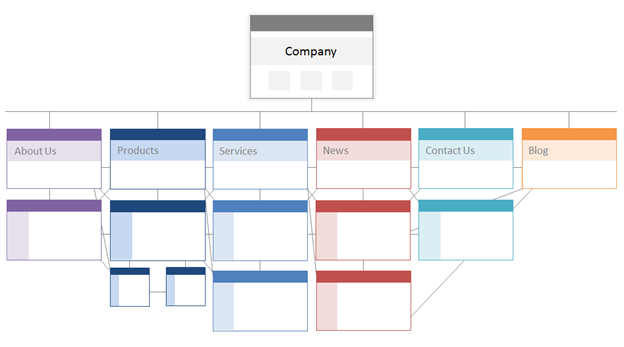

Your web site has a number of pages. These pages should be organized in a means that permits search engines like google and yahoo to simply discover and crawl them. That’s the place your web site construction — sometimes called your web site’s info structure — is available in.

In the identical means {that a} constructing relies on architectural design, your web site structure is the way you arrange the pages in your web site.

Associated pages are grouped collectively; for instance, your weblog homepage hyperlinks to particular person weblog posts, which every hyperlink to their respective creator pages. This construction helps search bots perceive the connection between your pages.

Your web site structure also needs to form, and be formed by, the significance of particular person pages. The nearer Web page A is to your homepage, the extra pages hyperlink to Web page A, and the extra hyperlink fairness these pages have, the extra significance search engines like google and yahoo will give to Web page A.

For instance, a hyperlink out of your homepage to Web page A demonstrates extra significance than a hyperlink from a weblog put up. The extra hyperlinks to Web page A, the extra “important” that web page turns into to search engines like google and yahoo.

Conceptually, a web site structure might look one thing like this, the place the About, Product, Information, and so forth. pages are positioned on the high of the hierarchy of web page significance.

Ensure that a very powerful pages to your small business are on the high of the hierarchy with the best variety of (related!) inner hyperlinks.

4. Set a URL structure.

URL structure refers to how you structure your URLs, which could be determined by your site architecture. I’ll explain the connection in a moment. First, let’s clarify that URLs can have subdomains, like blog.hubspot.com, and/or subdirectories (subfolders), like hubspot.com/blog, that indicate where the URL leads.

As an example, a blog post titled How to Groom Your Dog would fall under a blog subdomain or subdirectory. The URL might be www.bestdogcare.com/blog/how-to-groom-your-dog, whereas a product page on that same site would be www.bestdogcare.com/products/grooming-brush.

Whether you use subdomains or subdirectories, or “products” versus “store” in your URL, is entirely up to you. The beauty of creating your own website is that you can create the rules. What’s important is that those rules follow a unified structure, meaning that you shouldn’t switch between blog.yourwebsite.com and yourwebsite.com/blogs on different pages. Create a roadmap, apply it to your URL naming structure, and stick to it.

Here are a few more tips about how to write your URLs:

- Use lowercase characters.

- Use dashes to separate words.

- Make them short and descriptive.

- Avoid using unnecessary characters or words (including prepositions).

- Include your target keywords.

Once you have your URL structure buttoned up, you’ll submit a list of URLs of your important pages to search engines in the form of an XML sitemap. Doing so gives search bots additional context about your site so they don’t have to figure it out as they crawl.
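The URL-writing tips above (lowercase, dashes between words, no unnecessary characters) can be sketched as a small Python slug function. The name `slugify` and the `STOP_WORDS` list are illustrative assumptions; dropping filler words is left optional because the example URL earlier keeps “to”.

```python
import re

# Assumed list of filler words; adjust to taste.
STOP_WORDS = {"a", "an", "the", "to", "of", "in", "on", "for", "with"}


def slugify(title: str, drop_stop_words: bool = False) -> str:
    """Lowercase the title, keep letters and digits, and join words with dashes."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    if drop_stop_words:
        # Fall back to all words if the title is made only of stop words.
        words = [w for w in words if w not in STOP_WORDS] or words
    return "-".join(words)


print(slugify("How to Groom Your Dog"))
print(slugify("How to Groom Your Dog", drop_stop_words=True))
```

Whichever variant you pick, apply it consistently so every URL on the site follows the same rule.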

5. Utilize robots.txt.

When a web robot crawls your site, it will first check the /robots.txt file, otherwise known as the Robots Exclusion Protocol. This protocol can allow or disallow specific web robots to crawl your site, including specific sections or even pages of your site. If you’d like to prevent bots from indexing your site, you’ll use a noindex robots meta tag. Let’s discuss both of these scenarios.

You may want to block certain bots from crawling your site altogether. Unfortunately, there are some bots out there with malicious intent, bots that will scrape your content or spam your community forums. If you notice this bad behavior, you’ll use your robots.txt to prevent them from entering your website. In this scenario, you can think of robots.txt as your force field against bad bots on the internet.

Regarding indexing, search bots crawl your site to gather clues and find keywords so they can match your web pages with relevant search queries. But, as we discussed earlier, you have a crawl budget that you don’t want to spend on unnecessary data. So, you may want to exclude pages that don’t help search bots understand what your website is about, for example, a Thank You page from an offer or a login page.

No matter what, your robots.txt file will be unique depending on what you’d like to accomplish.
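To see how these rules are interpreted, here is a short Python sketch that feeds a sample robots.txt to the standard library’s `urllib.robotparser`. The bot name “BadBot” and the blocked paths are made-up examples, not recommendations for your site.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt: block one bot entirely, hide two low-value pages from all bots.
ROBOTS_TXT = """\
User-agent: BadBot
Disallow: /

User-agent: *
Disallow: /login
Disallow: /thank-you
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent, path in [("BadBot", "/blog"), ("Googlebot", "/blog"), ("Googlebot", "/login")]:
    verdict = "allowed" if parser.can_fetch(agent, path) else "blocked"
    print(f"{agent} -> {path}: {verdict}")
```

Running a check like this before you publish a new robots.txt is one way to confirm that only the pages you meant to block are blocked.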

6. Add breadcrumb menus.

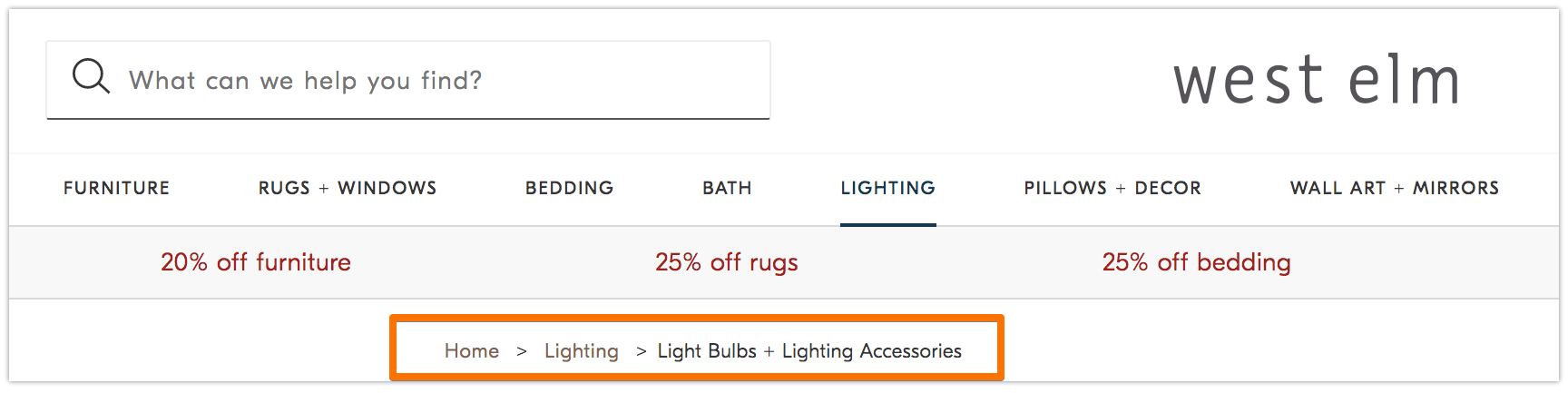

Keep in mind the previous fable Hansel and Gretel the place two youngsters dropped breadcrumbs on the bottom to seek out their means again house? Nicely, they had been on to one thing.

Breadcrumbs are precisely what they sound like — a path that guides customers to again to the beginning of their journey in your web site. It’s a menu of pages that tells customers how their present web page pertains to the remainder of the location.

They usually aren’t only for web site guests; search bots use them, too.

Breadcrumbs must be two issues: 1) seen to customers to allow them to simply navigate your net pages with out utilizing the Again button, and a pair of) have structured markup language to present correct context to look bots which are crawling your web site.

Unsure easy methods to add structured knowledge to your breadcrumbs? Use this information for BreadcrumbList.
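For a sense of what that structured data looks like, the Python sketch below builds schema.org BreadcrumbList markup as JSON-LD from a list of (name, URL) pairs. The trail and the helper name `breadcrumb_jsonld` are illustrative; the output would typically be placed in a script tag of type application/ld+json on the page.

```python
import json


def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> str:
    """Build schema.org BreadcrumbList JSON-LD from (name, url) pairs, in order."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }
    return json.dumps(data, indent=2)


# Placeholder trail for illustration only.
print(breadcrumb_jsonld([
    ("Home", "https://www.yourwebsite.com/"),
    ("Blog", "https://www.yourwebsite.com/blog"),
]))
```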

7. Use pagination.

Remember when teachers would require you to number the pages on your research paper? That’s called pagination. In the world of technical SEO, pagination has a slightly different role, but you can still think of it as a form of organization.

Pagination uses code to tell search engines when pages with distinct URLs are related to each other. For instance, you may have a content series that you break up into chapters or multiple webpages. If you want to make it easy for search bots to discover and crawl these pages, then you’ll use pagination.

The way it works is pretty simple. You’ll go to the <head> of page one of the series and use rel="next" to tell the search bot which page to crawl second. Then, on page two, you’ll use rel="prev" to indicate the prior page and rel="next" to indicate the subsequent page, and so on.

It looks like this…

On page one:

<link rel="next" href="https://www.website.com/page-two" />

On page two:

<link rel="prev" href="https://www.website.com/page-one" />

<link rel="next" href="https://www.website.com/page-three" />

Note that pagination is useful for crawl discovery, but is no longer supported by Google to batch index pages as it once was.

8. Check your SEO log files.

You can think of log files like a journal entry. Web servers (the journaler) record and store log data about every action they take on your site in log files (the journal). The data recorded includes the time and date of the request, the content requested, and the requesting IP address. You can also identify the user agent, which is a uniquely identifiable piece of software (like a search bot, for example) that fulfills the request for a user.

But what does this have to do with SEO?

Well, search bots leave a trail in the form of log files when they crawl your site. You can determine if, when, and what was crawled by checking the log files and filtering by the user agent and search engine.

This information is useful to you because you can determine how your crawl budget is spent and which barriers to indexing or access a bot is experiencing. To access your log files, you can either ask a developer or use a log file analyzer, like Screaming Frog.
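If you want a quick look before reaching for a dedicated tool, the filtering described above can be sketched in Python. This assumes your server writes the common “combined” access log format (your format may differ), and the sample lines are invented. Keep in mind that a user-agent string can be spoofed, so treat matches as a first pass.

```python
import re
from collections import Counter

# Assumed layout: the "combined" access log format used by many web servers.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def bot_hits(lines, bot="Googlebot"):
    """Count (path, status) pairs for requests whose user agent mentions the bot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and bot.lower() in match["agent"].lower():
            hits[(match["path"], match["status"])] += 1
    return hits


# Invented sample lines for illustration only.
sample = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /blog HTTP/1.1" 200 2326 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2023:13:56:01 +0000] "GET /blog HTTP/1.1" 200 2326 '
    '"-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
print(bot_hits(sample))
```

Grouping by status code as well as path makes it easier to spot pages where a bot keeps hitting errors.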

Just because a search bot can crawl your site doesn’t necessarily mean that it can index all of your pages. Let’s take a look at the next layer of your technical SEO audit: indexability.

Indexability Checklist

As search bots crawl your website, they begin indexing pages based on their topic and relevance to that topic. Once indexed, your page is eligible to rank on the SERPs. Here are a few factors that can help your pages get indexed.

- Unblock search bots from accessing pages.

- Remove duplicate content.

- Audit your redirects.

- Check the mobile-responsiveness of your site.

- Fix HTTP errors.

1. Unblock search bots from accessing pages.

You’ll likely take care of this step when addressing crawlability, but it’s worth mentioning here. You want to ensure that bots are sent to your preferred pages and that they can access them freely. You have a few tools at your disposal to do this. Google’s robots.txt tester will give you a list of pages that are disallowed, and you can use the Google Search Console’s Inspect tool to determine the cause of blocked pages.

2. Remove duplicate content.

Duplicate content confuses search bots and negatively impacts your indexability. Remember to use canonical URLs to establish your preferred pages.

3. Audit your redirects.

Verify that all of your redirects are set up properly. Redirect loops, broken URLs, or, worse, improper redirects can cause issues when your site is being indexed. To avoid this, audit all of your redirects regularly.
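A redirect audit largely comes down to following each redirect until it stops and flagging loops and multi-hop chains. Here is a minimal Python sketch over an in-memory redirect map. The map contents and the helper name `trace_redirects` are made up; a real audit would pull the map from your server config or a crawl export.

```python
def trace_redirects(redirects: dict[str, str], start: str) -> tuple[list[str], str]:
    """Follow redirects from start; return the path taken and a verdict.

    Verdicts: "ok" (zero or one hop), "chain" (two or more hops), "loop".
    """
    chain = [start]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:
            return chain + [target], "loop"
        chain.append(target)
    return chain, "chain" if len(chain) - 1 > 1 else "ok"


# Made-up redirect map for illustration only.
redirect_map = {"/old": "/interim", "/interim": "/new", "/a": "/b", "/b": "/a"}
for url in ("/old", "/a", "/new"):
    print(url, trace_redirects(redirect_map, url))
```

Chains can usually be fixed by pointing the first URL straight at the final destination.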

4. Check the mobile-responsiveness of your site.

If your website is not mobile-friendly by now, then you’re far behind where you need to be. As early as 2016, Google started indexing mobile sites first, prioritizing the mobile experience over desktop. Today, that indexing is enabled by default. To keep up with this important trend, you can use Google’s mobile-friendly test to check where your website needs to improve.

5. Fix HTTP errors.

HTTP stands for HyperText Transfer Protocol, but you probably don’t care about that. What you do care about is when HTTP returns errors to your users or to search engines, and how to fix them.

HTTP errors can impede the work of search bots by blocking them from important content on your site. It is, therefore, incredibly important to address these errors quickly and thoroughly.

Since every HTTP status is different and requires a specific resolution, the list below has a brief explanation of each.

- 301 Permanent Redirects are used to permanently send traffic from one URL to another. Your CMS will allow you to set up these redirects, but too many of them can slow down your site and degrade your user experience, as each additional redirect adds to page load time. Aim for zero redirect chains, if possible, as too many will cause search engines to give up crawling that page.

- 302 Temporary Redirect is a way to temporarily redirect traffic from a URL to a different webpage. While this status code will automatically send users to the new webpage, the cached title tag, URL, and description will remain consistent with the origin URL. If the temporary redirect stays in place long enough, though, it will eventually be treated as a permanent redirect and those elements will pass to the destination URL.

- 403 Forbidden Messages mean that the content a user has requested is restricted based on access permissions or due to a server misconfiguration.

- 404 Error Pages tell users that the page they have requested doesn’t exist, either because it’s been removed or because they typed the wrong URL. It’s always a good idea to create 404 pages that are on-brand and engaging to keep visitors on your site.

- 405 Method Not Allowed means that your website server recognized and still blocked the access method, resulting in an error message.

- 500 Internal Server Error is a general error message that means your web server is experiencing issues delivering your site to the requesting party.

- 502 Bad Gateway Error is related to miscommunication, or an invalid response, between website servers.

- 503 Service Unavailable tells you that while your server is functioning properly, it is unable to fulfill the request.

- 504 Gateway Timeout means a server did not receive a timely response from your web server to access the requested information.

Whatever the reason for these errors, it’s important to address them to keep both users and search engines happy, and to keep both coming back to your site.
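When you review crawl results, it can help to bucket status codes by family before digging into individual pages. The Python sketch below uses the standard library’s `http.HTTPStatus` for the official reason phrases; the `KINDS` labels and the `triage` helper are illustrative names, not part of any tool mentioned above.

```python
from http import HTTPStatus

# Label for each status family, keyed by the first digit of the code.
KINDS = {
    1: "informational",
    2: "success",
    3: "redirect",
    4: "client error",
    5: "server error",
}


def triage(code: int) -> str:
    """Describe a status code with its standard phrase and its family."""
    status = HTTPStatus(code)  # raises ValueError for codes the library doesn't know
    return f"{code} {status.phrase}: {KINDS[code // 100]}"


for code in (301, 404, 503):
    print(triage(code))
```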

Even if your site has been crawled and indexed, accessibility issues that block users and bots will impact your SEO. That said, we need to move on to the next stage of your technical SEO audit: renderability.

Renderability Checklist

Before we dive into this topic, it’s important to note the difference between SEO accessibility and web accessibility. The latter revolves around making your web pages easy to navigate for users with disabilities or impairments, like blindness or dyslexia, for example. Many elements of online accessibility overlap with SEO best practices. However, an SEO accessibility audit doesn’t account for everything you’d need to do to make your site more accessible to visitors who are disabled.

We’re going to focus on SEO accessibility, or rendering, in this section, but keep web accessibility top of mind as you develop and maintain your site.

An accessible site is based on ease of rendering. Below are the website elements to review for your renderability audit.

Server Performance

As you learned above, server timeouts and errors will cause HTTP errors that hinder users and bots from accessing your site. If you notice that your server is experiencing issues, use the HTTP status descriptions above to troubleshoot and resolve them. Failure to do so in a timely manner can result in search engines removing your web page from their index, as it is a poor experience to show a broken page to a user.

HTTP Status

Similar to server performance, HTTP errors will prevent access to your webpages. You can use a web crawler, like Screaming Frog, Botify, or DeepCrawl, to perform a comprehensive error audit of your site.

Load Time and Page Size

If your page takes too long to load, the bounce rate is not the only problem you have to worry about. A delay in page load time can result in a server error that will block bots from your webpages or have them crawl partially loaded versions that are missing important sections of content. Depending on how much crawl demand there is for a given resource, bots will spend an equivalent amount of resources to attempt to load, render, and index pages. However, you should do everything in your control to decrease your page load time.

JavaScript Rendering

Google admittedly has a difficult time processing JavaScript (JS) and, therefore, recommends employing pre-rendered content to improve accessibility. Google also has a host of resources to help you understand how search bots access JS on your site and how to improve search-related issues.

Orphan Pages

Every page on your site should be linked to at least one other page, preferably more, depending on how important the page is. When a page has no internal links, it’s called an orphan page. Like an article with no introduction, these pages lack the context that bots need to understand how they should be indexed.

Page Depth

Page depth refers to how many layers down a page exists in your site structure, i.e. how many clicks away from your homepage it is. It’s best to keep your site architecture as shallow as possible while still maintaining an intuitive hierarchy. Sometimes a multi-layered site is inevitable; in that case, you’ll want to prioritize a well-organized site over shallowness.

Regardless of how many layers are in your site structure, keep important pages, like your product and contact pages, no more than three clicks deep. A structure that buries your product page so deep in your site that users and bots need to play detective to find it is less accessible and provides a poor experience.

For example, a website URL like this that guides your target audience to your product page is an example of a poorly planned site structure: www.yourwebsite.com/products-features/features-by-industry/airlines-case-studies/airlines-products.
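Page depth and orphan pages can both be checked from a simple map of internal links. The Python sketch below uses a breadth-first search from the homepage to count clicks and flags pages that no other page links to. The link map is invented for illustration, and `click_depths` and `orphan_pages` are hypothetical helper names; a crawler export would supply the real data.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage: page -> minimum clicks to reach it."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict[str, list[str]], home: str = "/") -> set[str]:
    """Known pages (other than the homepage) that no page links to."""
    linked = {target for targets in links.values() for target in targets}
    return set(links) - linked - {home}


# Invented internal link map: page -> pages it links to.
site_links = {
    "/": ["/products", "/blog"],
    "/products": ["/products/grooming-brush"],
    "/blog": [],
    "/products/grooming-brush": [],
    "/old-landing-page": ["/"],
}
print(click_depths(site_links))
print(orphan_pages(site_links))
```

Pages deeper than three clicks, or missing from the depth map entirely, are the ones to look at first.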

Redirect Chains

While you resolve to redirect site visitors from one web page to a different, you’re paying a value. That value is crawl effectivity. Redirects can decelerate crawling, scale back web page load time, and render your web site inaccessible if these redirects aren’t arrange correctly. For all of those causes, attempt to maintain redirects to a minimal.

As soon as you have addressed accessibility points, you’ll be able to transfer onto how your pages rank within the SERPs.

Rankability Guidelines

Now we transfer to the extra topical parts that you simply’re in all probability already conscious of — easy methods to enhance rating from a technical Search engine marketing standpoint. Getting your pages to rank includes among the on-page and off-page parts that we talked about earlier than however from a technical lens.

Remember that all of these elements work together to create an SEO-friendly site. So, we’d be remiss to leave out any of the contributing factors. Let’s dive in.

Internal and External Linking

Links help search bots understand where a page fits in the grand scheme of a query and give context for how to rank that page. Links guide search bots (and users) to related content and transfer page importance. Overall, linking improves crawling, indexing, and your ability to rank.

Backlink Quality

Backlinks (links from other sites back to your own) provide a vote of confidence for your site. They tell search bots that External Website A believes your page is high-quality and worth crawling. As these votes add up, search bots notice and treat your site as more credible. Sounds like a great deal, right? However, as with most great things, there’s a caveat: the quality of those backlinks matters, a lot.

Links from low-quality sites can actually hurt your rankings. There are many ways to get quality backlinks to your site, like outreach to relevant publications, claiming unlinked mentions, and providing helpful content that other sites want to link to.

Content Clusters

We at HubSpot haven’t been shy about our love for content clusters or how they contribute to organic growth. Content clusters link related content so search bots can easily find, crawl, and index all of the pages you own on a particular topic. They act as a self-promotion tool to show search engines how much you know about a topic, so they are more likely to rank your site as an authority for any related search query.
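A cluster only helps if its pages are actually linked together. The sketch below is one possible check, assuming a cluster is organized around a central "pillar" page that links to each cluster page and is linked back from each of them; that two-way layout, the URLs, and the function name are assumptions made for the example.

```python
def missing_cluster_links(internal_links, pillar, cluster_pages):
    """Return (pages the pillar does not link to, pages that do not link back)."""
    pillar_targets = set(internal_links.get(pillar, []))
    not_linked_from_pillar = sorted(
        page for page in cluster_pages if page not in pillar_targets
    )
    not_linking_to_pillar = sorted(
        page for page in cluster_pages if pillar not in internal_links.get(page, [])
    )
    return not_linked_from_pillar, not_linking_to_pillar


# Hypothetical cluster about technical SEO.
links = {
    "/technical-seo": ["/technical-seo/site-speed", "/technical-seo/crawl-budget"],
    "/technical-seo/site-speed": ["/technical-seo"],
    "/technical-seo/crawl-budget": [],  # forgot to link back to the pillar
    "/technical-seo/structured-data": ["/technical-seo"],  # pillar never links here
}
cluster = [
    "/technical-seo/site-speed",
    "/technical-seo/crawl-budget",
    "/technical-seo/structured-data",
]

from_pillar, to_pillar = missing_cluster_links(links, "/technical-seo", cluster)
print(from_pillar)
print(to_pillar)
```

Each list points to a gap that a single added link would close.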

Your rankability is the main determinant of organic traffic growth because studies show that searchers are more likely to click on the top three results on SERPs. But how do you ensure that yours is the result that gets clicked?

Let’s round this out with the final piece of the organic traffic pyramid: clickability.

Clickability Checklist

While click-through rate (CTR) has everything to do with searcher behavior, there are things you can do to improve your clickability on the SERPs. Meta descriptions and page titles with keywords do impact CTR, but we’re going to focus on the technical elements because that’s why you’re here.

- Use structured data.

- Win SERP features.

- Optimize for Featured Snippets.

- Consider Google Discover.

Ranking and click-through rate go hand-in-hand because, let’s be honest, searchers want fast answers. The more your result stands out on the SERP, the more likely you’ll get the click. Let’s go over a few ways to improve your clickability.

1. Use structured data.

Structured data employs a specific vocabulary called schema to categorize and label elements on your webpage for search bots. The schema makes it crystal clear what each element is, how it relates to your site, and how to interpret it. Basically, structured data tells bots, “This is a video,” “This is a product,” or “This is a recipe,” leaving no room for interpretation.

To be clear, using structured data is not a “clickability factor” (if there even is such a thing), but it does help organize your content in a way that makes it easy for search bots to understand, index, and potentially rank your pages.
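As an illustration of the “This is a product” label, here is a minimal sketch that builds a schema.org `Product` description and wraps it in a JSON-LD script tag, one common way to embed structured data in a page. The product details and the helper name `product_json_ld` are invented for the example, and a real page would typically include more properties.

```python
import json


def product_json_ld(name, description, price, currency):
    """Build a JSON-LD <script> tag that labels a page element as a product."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
        },
    }
    # Escape "</" so text inside the JSON cannot close the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical product used only for illustration.
snippet = product_json_ld("Example Widget", "A sample product.", 19.5, "USD")
print(snippet)
```

The resulting tag goes in the page’s HTML, where search bots can read the labels without having to infer them from the visible content.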

2. Win SERP features.

SERP features, otherwise known as rich results, are a double-edged sword. If you win them and get the click-through, you’re golden. If not, your organic results are pushed down the page beneath sponsored ads, text answer boxes, video carousels, and the like.

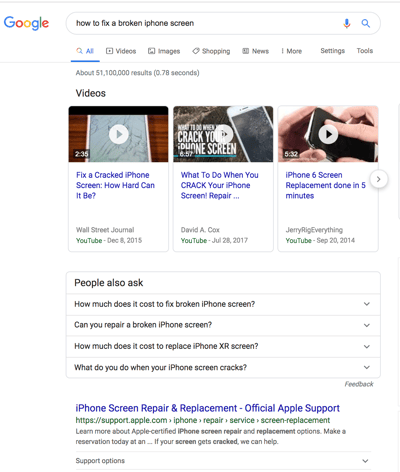

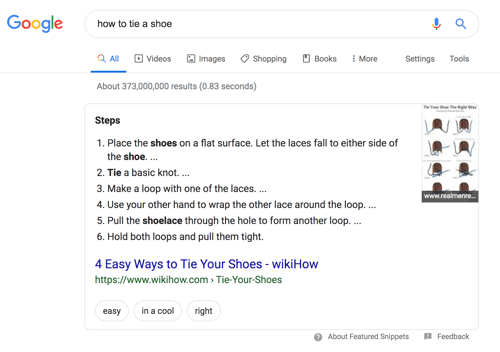

Rich results are those elements that don’t follow the page title, URL, and meta description format of other search results. For example, the accompanying image shows two SERP features (a video carousel and a “People Also Ask” box) above the first organic result.

While you can still get clicks from appearing in the top organic results, your chances are greatly improved with rich results.

How do you increase your chances of earning rich results? Write useful content and use structured data. The easier it is for search bots to understand the elements of your site, the better your chances of getting a rich result.

Structured data is useful for getting these (and other search gallery elements) from your site to the top of the SERPs, thereby increasing the probability of a click-through:

- Articles

- Videos

- Reviews

- Events

- How-Tos

- FAQs (“People Also Ask” boxes)

- Images

- Local Business Listings

- Products

- Sitelinks

3. Optimize for Featured Snippets.

One unicorn SERP feature that has nothing to do with schema markup is Featured Snippets, those boxes above the search results that provide concise answers to search queries.

Featured Snippets are meant to get searchers the answers to their queries as quickly as possible. According to Google, providing the best answer to the searcher’s query is the only way to win a snippet. However, HubSpot’s research revealed a few additional ways to optimize your content for featured snippets.

4. Consider Google Discover.

Google Discover is a relatively new algorithmic listing of content by category, built specifically for mobile users. It’s no secret that Google has been doubling down on the mobile experience; with over 50% of searches coming from mobile, it’s no surprise either. The tool allows users to build a library of content by selecting categories of interest (think: gardening, music, or politics).

At HubSpot, we believe topic clustering can increase the likelihood of Google Discover inclusion and are actively monitoring our Google Discover traffic in Google Search Console to determine the validity of that hypothesis. We recommend that you also invest some time in researching this new feature. The payoff is a highly engaged user base that has basically hand-selected the content you’ve worked hard to create.

The Perfect Trio

Technical SEO, on-page SEO, and off-page SEO work together to unlock the door to organic traffic. While on-page and off-page techniques are often the first to be deployed, technical SEO plays a critical role in getting your site to the top of the search results and your content in front of your ideal audience. Use these technical tactics to round out your SEO strategy and watch the results unfold.