Studying Time: 10 minutes

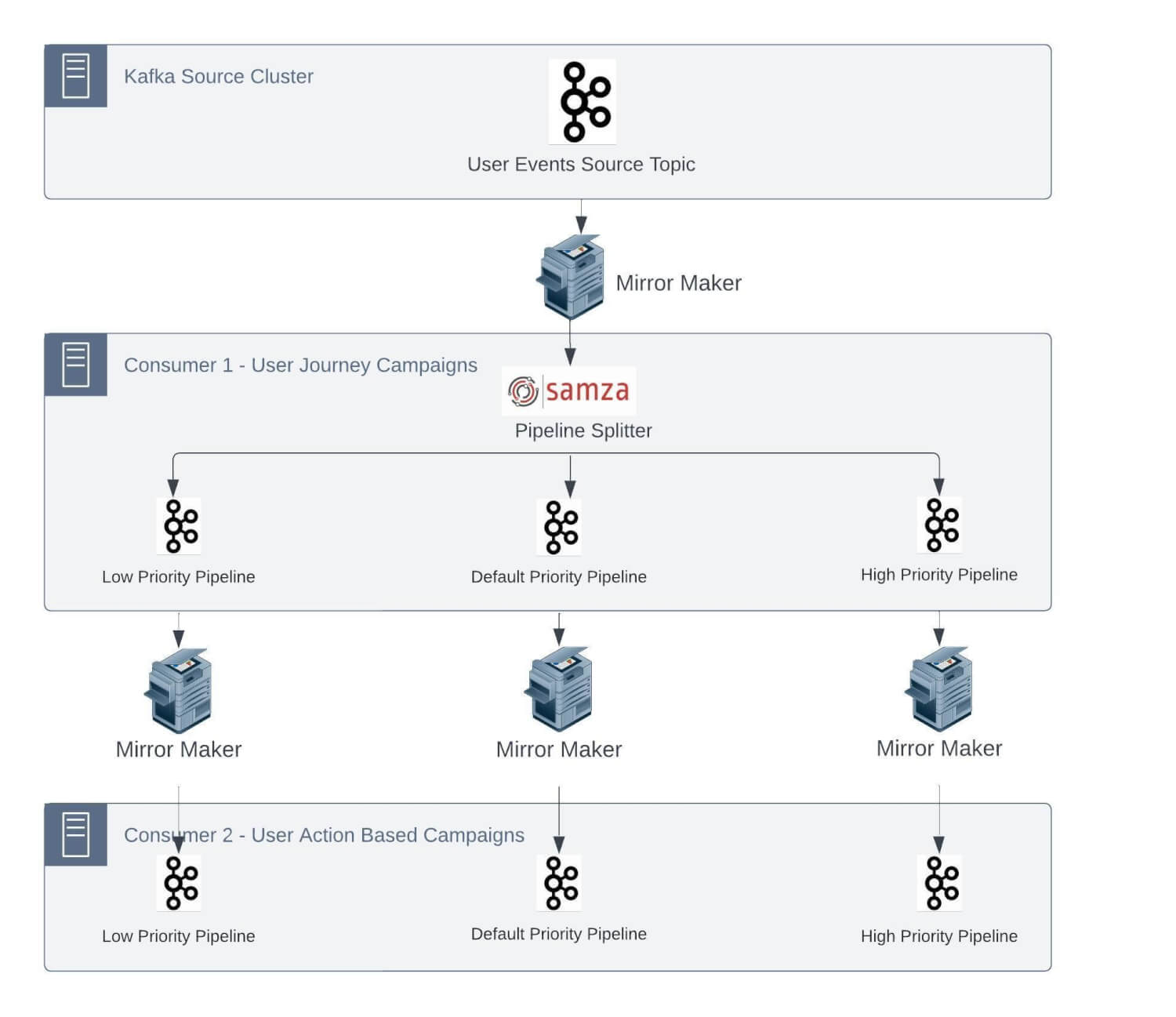

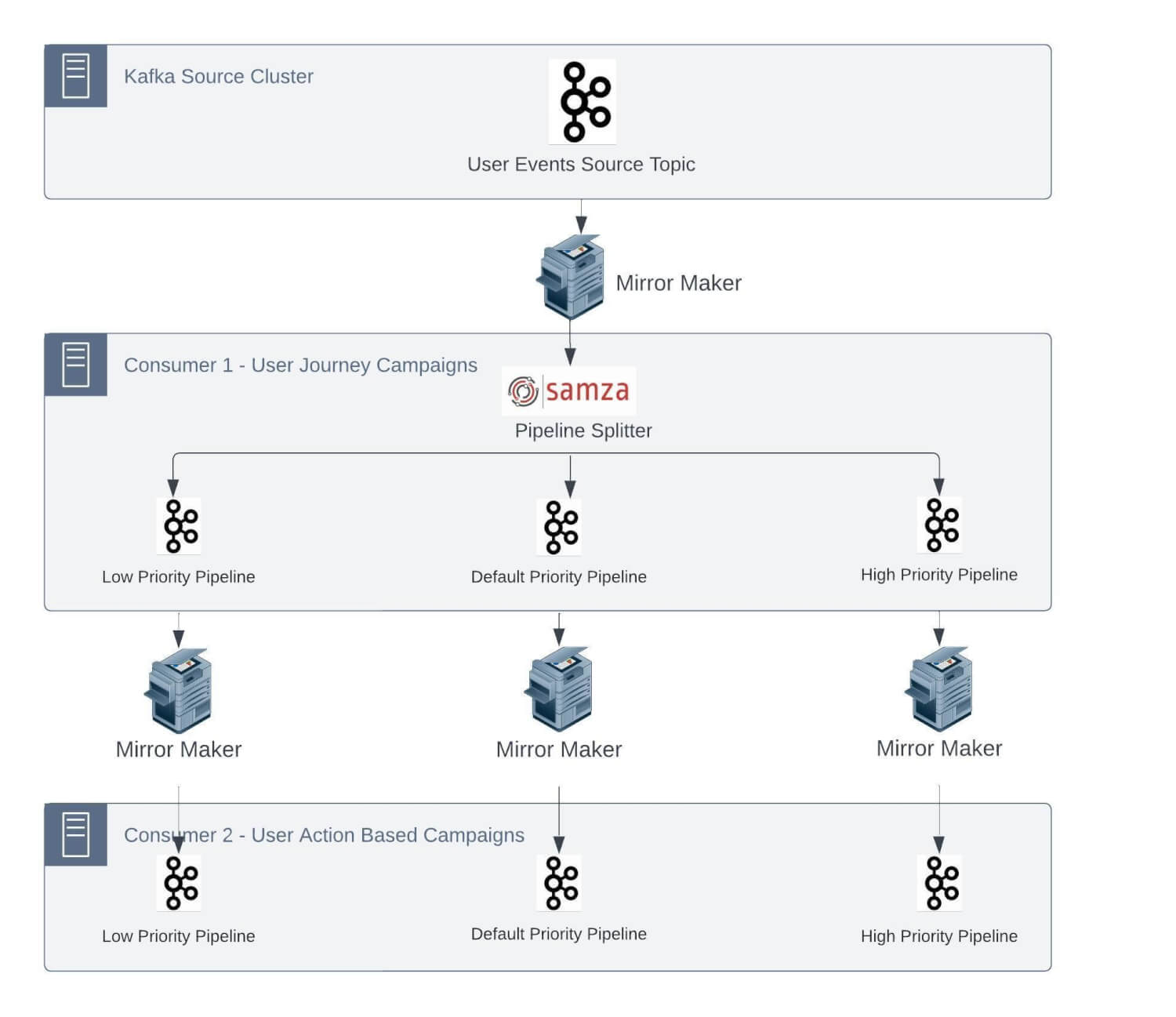

Actual-time streaming serves because the spine of the MoEngage product options. Proper from the early days of 2015-16, we’ve got extensively used Apache Kafka and Apache Samza for real-time occasions processing for each stateless and stateful knowledge pipelines.

Over the interval of the final 8 years, we’ve got seen each the evolution of our personal product and a multifold improve within the scale of information processing wants.

There have been a number of learnings with operating and working a big set of Kafka clusters together with Samza functions. We’ve got carried out many upgrades and restructures to attain the most effective performances from these programs for our use instances.

Earlier, we printed our studying of managing huge Kafka clusters Kafka Redesign and Classes Realized. Presently, we’ve got a number of knowledge facilities throughout geographies in AWS and Azure. We function with greater than 10 clusters in every knowledge heart.

On this put up, we’re writing about how we’ve got been enhancing and additional restructuring one of many greatest Kafka clusters.

State of Kafka Clusters

We’ve got devoted Kafka clusters for varied enterprise use instances based mostly on our product options and shopper necessities.

This cluster handles site visitors of some million occasions per minute. There are a number of business-critical jobs which are deployed as Samza functions. For these functions, streaming pipelines are anticipated to work with a Service Degree Settlement (SLA) in single-digit seconds for end-to-end processing.

An instance use case for this type of strict SLA is time-critical actions/notifications despatched to clients at any time when they undergo a journey on an E-commerce web site. One other instance might be sending a transactional OTP after the client accesses a security-enabled characteristic on the shopper web site/cell app for identification re-verification.

The Want for Restructuring Kafka Clusters

Primarily based on strict SLAs at our knowledge quantity, we would have liked to enhance our Kafka infrastructure. One of many greatest Kafka clusters we function is ‘Kafka-automation’. We comply with the nomenclature of naming Kafka clusters based mostly on the area. We just lately restructured this cluster for higher efficiency. This internally serves a number of microservices and streaming jobs required to help this use case.

As talked about, our streaming knowledge pipeline consists of Kafka and Samza stack for processing and clever ETL of event-based knowledge. This stack has some inherent limitations, which acquired aggravated because the variety of jobs and site visitors on every job elevated over time.

As most of those jobs have quite a lot of legacy code to allow the characteristic set and preserve SLAs, it’s not possible to completely change this structure. We are going to now go deeper into a few of the vital challenges we have been dealing with:

1. One-to-one mapping of supply subject partitions with the variety of Samza containers

As talked about earlier, we’ve got a number of stateful jobs. These Samza jobs have the inner state as changelog subjects within the Kafka cluster. Being a stateful utility, a problem will come up to course of the occasion in an outlined SLA within the case of a changelog subject that doesn’t have the required state and must make a community name to a Database to retrieve the state.

We run Samza on yarn, and every container processes the occasions from a single partition of the Kafka subject to maintain the end-to-end processing time as little as attainable. Samza course of and window features comply with single-thread semantics.

Now, let’s take a situation: assume that the common time spent processing a message in stateful functions is 5 ms. Primarily based on this, the utmost throughput from a partition might be 200 messages per second. So, if we’ve got to course of 100K msg/sec, it might require 500 partitions within the supply subject.

Contemplating our progress price and to deal with the height situations, we repartitioned this specific subject with 600 partitions within the Kafka cluster.

We use Rocksdb because the native cache for Samza StreamTask. This reduces the necessity to fetch knowledge from any exterior supply at processing time and retains on getting up to date via database CDC Kafka subjects. The best way Samza operates, we have to have the related cache for occasion processing routed to the right yarn container in order that no community name is required.

This requires messages in several subjects to be produced with the identical key/identifier such that they all the time go into the identical partition quantity and forces these enter streams to have the identical variety of partitions.

So now, different ingestion CDC subjects required to replenish the Rocksdb cache should even be created with the identical variety of partitions.

These jobs can have a number of inside states, too. For instance, if an utility has 4 inside states and would have corresponding 4 changelogs, which get created with the identical variety of partitions by the Samza utility.

Particular to this use case up to now, we’ve got 1 Unified subject, 2 CDC subjects, 4 changelog subjects, and seven subjects, every with 600 partitions. As time handed, we onboarded extra Samza functions, consuming occasions from the unified stream. We additionally had low-, medium-, and high-priority subject separations, leading to much more subjects within the Kafka cluster.

This has been an operational nightmare for us, forcing upstream groups to repartition and rebalance subjects based mostly on downstream jobs to work correctly.

2. Deserted/Unused changelog subjects

Samza creates changelog subjects based mostly on its utility ID. Typically, utility IDs have to be modified resulting from model updates or inside job constraints. This leads to present changelog subjects being deserted and recreating new changelog subjects for brand spanking new utility IDs. Some jobs require frequent utility ID adjustments resulting from their nature of requirement.

By default, these changelog subjects are created as log compact subjects. Therefore, they maintain keyed messages in subjects even when these subjects are deserted and won’t be utilized in Sazma functions.

3. Brokers efficiency degradation

We began dealing with some important points with the brokers as site visitors grew over time. 1-to-1 mapping forces even subjects with smaller use instances with a low message price to be created with 600 partitions.

We reached a stage the place our Kafka cluster with 8 brokers was operating with greater than 20K+ partitions on every dealer and 100K+ partitions in whole, together with replicated partitions.

This brought about efficiency degradation for our brokers. We began dealing with the challenges mentioned under frequently.

- Too many open recordsdata errors: Every partition on the dealer has a logs listing within the file system the place it shops the messages. For each partition, brokers hold two recordsdata (one for the index and one other for appending the precise message knowledge) opened per log phase. There was greater than 300K+ recordsdata opened on every dealer. Per our earlier Kafka expertise of operating Kafka clusters, all of the brokers have been initially configured with 100K file descriptor limits. As subjects grew, the variety of file descriptors required began breaching the max restrict, and we began receiving errors for brokers being both down or restarted resulting from too many open file errors.

- Points with compaction subjects – Earlier than we dive deeper, take a look at Kafka compaction in case you are not conscious of the working dynamics of log compaction retention coverage in these posts – An investigation into Kafka Log Compaction and https://towardsdatascience.com/log-compacted-topics-in-apache-kafka-b1aa1e4665a7. Let’s perceive a few of the key configurations utilized in log compaction and the way they impacted our brokers –

-

-

phase.ms– This configuration controls the time period after which Kafka will pressure the log to roll even when the phase file isn’t full to make sure that retention can delete or compact outdated knowledge and the default worth is 7 days. So if there are very low message in-rates, log segments are closed after days, and put up that, deletion or compaction is carried out. -

min.washer-friendly.soiled.ratio– This configuration controls how steadily the log compactor will try to wash the log (assuming log compaction is enabled). By default, we’ll keep away from cleansing a log the place greater than 50% of the log has been compacted. If there are very low in-rates in subjects, then compaction is triggered in longer intervals, and if subjects don’t have any new incoming messages, Then compaction is not going to be triggered in any respect, and messages/logs-segment will retain the desk house perpetually. -

cleanup.coverage=compact,deletevarieties of functions, you will have home windows of time with many variations of the important thing. Through the window, you solely need to retain the newest model of the important thing. Nonetheless, as soon as the window has expired, you wish to have the segments for the window deleted. With each compact and delete-enabledretention.msof the changelog could be set to a price higher than the retention of the window. Though outdated home windows received’t routinely be eliminated on expiration, the dealer will ultimately take away them because the outdated segments expire. -

cleanup coverage–compact -> deleteSome changelog subjects merely work a caching the place the state might be constructed by querying the database.

-

-

Excessive CPU utilization – With our expertise of operating a Kafka cluster, we’ve got discovered that there’s a direct relation between ProduceRequests and Latency. Larger ProduceRequests result in greater CPU utilization on brokers and elevated latency. To maintain our cluster secure, we anticipated lowering ProduceRequest counts as a lot as attainable. It may be assumed {that a} Kafka producer will generate extra ProduceRequests if a subject has extra partitions. Since we created subjects with 600 partitions and added extra subjects, we reached a stage the place Kafka brokers all the time had ~90% CPU utilization.

-

Excessive disk utilization alerts – Many subjects had retention of weeks and month(s). A variety of excessive disk utilization alerts have been brought about resulting from such subjects.

Resulting from these issues, we’ve got been bombarded by Pager Obligation alerts one after the opposite, which has brought about degradation within the high quality of service we need to preserve. We nonetheless handle the margin of security with further infra so we don’t breach any client-side SLAs. This extra margin of security has inflated the infrastructure price for the clusters.

Additional, scaling and pushing new options has been tough resulting from these points. Each time a brand new characteristic was deliberate for launch, we would have liked to do a viability research on our present infrastructure and plan in response to that. This has elevated the launch time for a few our merchandise.

Multi-pronged Options For Main Points

With operating a cluster with all of the above challenges, we realized that creating subjects with many partitions doesn’t bode nicely for upkeep and smoother operations.

We carried out a few of the options listed under to deal with the main challenges detailed within the above part:

-

We can’t get out of Samza instantly. Resulting from this, we can’t utterly resolve 1 to 1 mapping of subject partitions to Samza job containers. We determined to cut back the variety of partitions and containers on the Samza aspect and improve the processing capability of particular person containers to accommodate for the processing pace. We revisited Samza utility configurations similar to producer batch dimension, linger ms, compression sort subject replication issue, and many others. to cut back the end-to-end processing time.

We additionally segregated stateless and stateful jobs in order that we might have a simple scaling course of.

-

As talked about earlier, when the appliance ID for a Samza job is modified, a brand new set of changelog subjects is created, and older modified subjects are merely deserted.

We usually see quite a lot of changelog subjects leading to big numbers of opened recordsdata, numbers of partitions on brokers, and the dealer because the chief for partitions.

Our strategy for cleansing these subjects was easy: we listed all of the subjects that didn’t obtain any site visitors within the final week and thought of them as deserted/unused. We modified the cleanup coverage to delete and diminished retention to 1 minute.

With these adjustments, messages have been cleaned from disks, however to cut back the opened file counts, we additionally needed to eliminate these partitions-metadata from the disk too. Since we’ve got subject deletion disabled for our enterprise requirement, it’s not possible to allow subject deletion briefly by altering the dealer’s configuration and deleting them because it requires dealer restarts. So, we’ve got added a dummy dealer occasion within the cluster and moved all such deserted subjects to this dealer by lowering the replication issue to 1. With these adjustments, we’ve got cleaned up the disk house and diminished opened recordsdata from brokers considerably.

Nonetheless, a brand new problem arose when a brand new subject creation might have partitions on this dummy dealer. So we had to decide on which brokers to make use of for partition distribution to keep away from dummy brokers.

-

We additionally elevated our dealer’s file descriptor limits to cut back too many open file errors. This gave momentary aid to the on-call staff.

-

We tuned our dealer’s configuration to our latest wants. We decreased the phase.ms to 1 day for sooner deletion and early compaction triggers. We modified min.washer-friendly.soiled.ratio = 0.1 to allow an aggressive compaction technique. This diminished the disk house utilization and opened file depend. Some subjects have very massive stateful states. We began enabling each insurance policies and set cleanup.coverage=compact, delete for log compaction subjects to cut back disk house utilization additional. We additionally modified the cleanup coverage from compact to delete wherever we might reside with the roles fetching knowledge from sources like databases and never Kafka subjects on restarts. This additional diminished disk utilization.

-

To lower the latency and cut back dealer CPU utilization, we experimented each with horizontal and vertical scaling and located a threshold {that a} dealer can serve inside the desired SLA if the ProduceRequests depend stays inside a restrict and located it to be roughly 4K for our use instances. However we would have liked so as to add extra jobs and subjects shortly so horizontal scaling (including extra brokers) turned the first possibility.

Once more, horizontal scaling requires manually redistributing the partitions to newly added brokers. Excessive-volume subjects required extra time to stability. Redistributing high-volume subjects additionally diminished disk utilization on older brokers and elevated utilization on newer brokers.

-

We requested our groups to re-access retention for his or her respective jobs and convey it to the minimal attainable interval with out inflicting SLA breaches.

With all of the above options and sustaining customary practices in thoughts, we created two new Kafka clusters for stateful and stateless jobs. All the subject partitions have been reevaluated or recreated with fewer partitions and the suitable replication components wherever attainable. Submit-migration, We’ve got seen an enormous enchancment in latency and SLA adherence.

NOTE: Not detailed, however we nonetheless have a few of these challenges due to enterprise constraints, which aren’t a part of this put up.

-

We’re additionally creating subjects with greater partition counts for low-in-rate subjects.

-

We nonetheless see the appliance being modified for Samza jobs and deserted subjects on brokers.

-

A number of subjects stay the place retention is of weeks and months.

-

Samza jobs nonetheless require additional tuning, similar to batch dimension, linger ms, compression, and many others.

Conclusion

Each time there’s an ask for SLA enchancment or latency discount, we must always relook at enhancing utility code, community calls, and caching and reevaluating the processing engine itself. Rising assets like partition depend and container counts, and many others, ought to be evaluated with nice care.

With a greater understanding of Kafka utilization and Samza tuning, we have been capable of enhance the reliability of our system. We will uphold our SLA dedication to our clients way more than we did with our older cluster, and we will do it with a 40% price discount.

However many of those fixes are nonetheless not fixing the actual root explanation for issues. These have given us respiratory house and allow us to serve the shoppers rapidly.

Most issues associated to throughput and latencies are born out of Samza’s occasion processing mannequin. Limitation in parallelizing the varied operators remains to be a bottleneck for us.

We’ve got evaluated different streaming options, and stream processing with Flink appears appropriate for fixing most of our challenges. We plan to maneuver out of Samza over time to implement a long-term answer for these challenges.

Altering the stack in a single go is unimaginable for a big group like MoEngage. We’ve got internally launched Flink-based streaming PAAS for our new jobs. This implementation makes use of Kubernetes as an orchestrator. This can even assist transfer away from Yarn-based job deployments and convey service containers and streaming jobs on the identical orchestration layer. However will probably be some time earlier than we go away completely huge Samza jobs. Till then, we’ll nonetheless have to take care of and function a few of the legacy implementations.

The put up Enhancing Reliability by Restructuring Kafka Cluster on MoEngage appeared first on MoEngage.