The era of AI-generated conversational search is, apparently, here.

On 16th December I published a piece about whether ChatGPT could pose a threat to Google, as many were already suggesting that it might, just two and a half weeks on from the chatbot’s launch. At the time of writing, neither Google nor Microsoft – a major backer of ChatGPT’s parent organisation, OpenAI – had indicated any plans to actually integrate technology like ChatGPT into their search engines, and the idea seemed like a far-off possibility.

While ChatGPT is an impressive conversational chatbot, it has some significant drawbacks, particularly as an arbiter of facts and information: large language models (LLMs) like ChatGPT have a tendency to “hallucinate” (the technical term) and confidently state incorrect information, an issue that ChatGPT’s makers have called “challenging” to fix. But the idea of a chat-based search interface has its appeal. In my previous article, I speculated that there could be a number of benefits to a conversational search assistant – like the ability to ask multi-part and follow-up questions, and receive a definitive single answer – if ChatGPT’s issues could be addressed.

Google at first appeared to be taking a cautious approach to responding to the ‘threat’ of ChatGPT, telling staff internally that it needed to move “more conservatively than a small startup” due to the “reputational risk” involved. Yet over the past several days, Microsoft and Google have engaged in an escalating war of AI-based search announcements, with Google announcing Bard, a conversational search agent powered by Google’s LLM, LaMDA, on 6th February, followed immediately by Microsoft holding a press conference to show off its own conversational search capabilities using an advanced version of ChatGPT.

Google’s initial hesitation over moving too quickly with AI-based search was perhaps shown to be justified when, hours before an event at which the company was due to demo Bard, Reuters spotted an error in one of the sample answers that Google had touted in its press release. Publications quickly filled up with headlines about the mistake, and Google responded by emphasising that this showed the need for “rigorous testing” of the AI technology, which it has already begun.

But is the damage already done? Have Google and Microsoft over-committed themselves to a problematic technology – or is there a way it can still be useful?

The problems with ChatGPT and LaMDA

Experts have been pointing out the problems with LLMs like ChatGPT and LaMDA for some time. As I mentioned earlier, ChatGPT’s own makers admit that the chatbot “sometimes writes plausible-sounding but incorrect or nonsensical answers. Fixing this issue is challenging.”

When ChatGPT was first released, users found that it would bizarrely insist on some demonstrably incorrect ‘facts’: the most infamous example involved ChatGPT insisting that the peregrine falcon was a marine mammal, but it has also asserted that the Royal Marines’ uniforms during the Napoleonic Wars were blue (they were red), and that the largest country in Central America that isn’t Mexico is Guatemala (by area, it is in fact Nicaragua). It has also been found to struggle with maths problems, particularly when those problems are written out in words instead of numbers – and this persisted even after OpenAI released an upgraded version of ChatGPT with “improved factuality and mathematical capabilities”.

Emily M. Bender, Professor of Linguistics at the University of Washington, spoke to the newsletter Semafor Technology in January about why the presentation of LLMs as ‘knowledgeable AI’ is misleading. “I worry that [large language models like ChatGPT] are oversold in ways that encourage people to take what they say as if it were the result of understanding both query and input text and reasoning over it,” she said.

“In fact, what they are designed to do is create plausible sounding text, without any grounding in communicative intent or accountability for truth – all while reproducing the biases of their training data.” Bender also co-authored a paper on the issues with LLMs called ‘On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?’ alongside Timnit Gebru, the then-head of AI ethics at Google, and a number of other Google staff.

Google has requisitioned a group of “trusted” external testers to feed back on the early version of Bard, whose feedback will be combined with internal testing “to make sure Bard’s responses meet a high bar for quality, safety and groundedness in real-world information”. The search bar in Google’s demonstrations of Bard is also accompanied by a warning: “Bard may give inaccurate or inappropriate information. Your feedback makes Bard more helpful and safe.”

Google has borne the brunt of the critical headlines due to Bard’s James Webb Space Telescope (JWST) gaffe, in which Bard erroneously stated that “JWST took the very first pictures of a planet outside of our own solar system”. However, Microsoft’s integration of ChatGPT into Bing – which is currently available on an invite-only basis – also has its issues.

When The Verge asked Bing’s chatbot on the day of the Microsoft press event what its parent company had announced that day, it correctly stated that Microsoft had launched a new Bing search powered by OpenAI, but also added that Microsoft had demonstrated its capability for “celebrity parodies”, something that was definitely not featured in the event. It also erroneously stated that Microsoft’s multibillion-dollar investment into OpenAI had been announced that day, when it was actually announced two weeks prior.

Citation needed

Microsoft’s new Bing FAQ contains a warning not dissimilar to Google’s Bard disclaimer: “Bing tries to keep answers fun and factual, but given this is an early preview, it can still show unexpected or inaccurate results based on the web content summarized, so please use your best judgment.”

The answer to another question, on whether Bing’s AI-generated responses are “always factual”, states: “Bing aims to base all its responses on reliable sources – but AI can make mistakes, and third party content on the internet may not always be accurate or reliable. Bing will sometimes misrepresent the information it finds, and you may see responses that sound convincing but are incomplete, inaccurate, or inappropriate. Use your own judgment and double check the facts before making decisions or taking action based on Bing’s responses.”

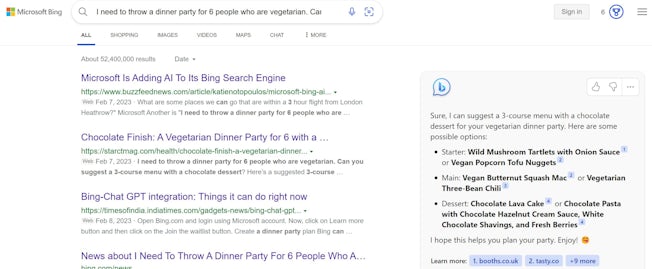

Many people would point out the irony of a search engine asking users to “double check the facts”, as search engines are often the first place that people go to fact-check things. However, to Bing’s credit, web search results are either positioned next to chat responses, or can easily be reached by toggling to the adjacent tab. The responses from Bing’s chatbot also include citations that show where each detail originated, along with a clickable footnote that links to the source; in the chat tab, certain phrases are underlined and can be hovered over to see the search result that produced the information.

Microsoft’s new chat-based Bing will place chat responses in a sidebar alongside web search results, or else house them in a separate tab, while also footnoting the sources of its responses. (Image source: screenshot of a pre-selected demo question)

This is something that Bard noticeably lacks at present, which has caused serious consternation among publishers – both because of the implications for their search traffic, and because it means that Bard may be drawing information from their sites without attributing it. One SEO queried Google’s John Mueller about the lack of sources in Bard, as well as asking what proportion of searches Bard will be available for – but Mueller’s response was that it is “too early to say”, although he added that “your feedback can help to shape the next steps”.

Ironically, including the source of Bard’s information could have mitigated the controversy around its James Webb Space Telescope mistake. As Marco Fonseca pointed out on Twitter, Bard wasn’t as far off the mark as it seemed: NASA’s website does say that “For the first time, astronomers have used NASA’s James Webb Space Telescope to take a direct image of a planet outside our solar system” – but the key word here is “direct”. The first image taken of an exoplanet in general was in 2004. This makes Bard’s mistake more like an error in summarising than an outright falsehood (though it was still an error).

In the Bard demonstrations that we have so far been shown, there is sometimes a small button labelled ‘Check it’ that appears beneath a search response. It’s not clear exactly what this produces or whether it is only intended for testers to assess and evaluate Bard’s responses, but a feature that shows how Bard assembled the information for its response would be important for transparency and fact-checking, while links back to the source would help to restore the relationship of trust with publishers.

However, it is also true that one of the main advantages of receiving a single answer in search is to avoid having to research multiple sources to come to a conclusion. In addition to this, many users wouldn’t check the originating source(s) to understand where the answer came from. Is there a way to apply generative AI in search that avoids this issue?

An assistant, not an encyclopaedia

During the period before Microsoft formally demonstrated its new Bing AI, but after the news had leaked that it was planning to launch one, there was much speculation about how Microsoft would integrate ChatGPT’s technology into Bing. One very interesting take came from Mary Jo Foley, Editor-in-Chief of the independent website Directions on Microsoft, who hypothesised that Microsoft could use ChatGPT to realise an old project, the Bing Concierge Bot.

The Bing Concierge Bot project has been around since 2016, when it was described as a “productivity agent” that would be able to run on a variety of messaging services (Skype, Telegram, WhatsApp, SMS) and help the user to accomplish tasks. The project reappeared in 2021 in a guise that sounds fairly similar to ChatGPT’s current form, albeit with much less sophisticated chat functionality; the bot could return search recommendations for products such as laptops and also provide assistance with things like Windows upgrades.

Foley suggested that Bing could use ChatGPT not as a web search agent, but more as a general assistant across various Microsoft workplace services and tools. There is a sense that this is the direction that Microsoft is heading in: in addition to an AI agent to accompany web search, Microsoft has integrated ChatGPT functionality into its Edge browser, which can summarise the contents of a document for you and also compose text, for example for a LinkedIn post. In its FAQ, Microsoft also describes the “new Bing” as “like having a research assistant, personal planner, and creative partner at your side whenever you search the web”.

One of Bing’s example queries for its chat-based search, suggesting a dinner party menu for six vegetarian guests, illustrates how the chatbot can save time through a combination of idea generation and research: it presents options sourced from various websites, which the searcher can read through before clicking on the recipe that sounds most appealing. An article by Wired’s Aarian Marshall on how the Bing chatbot performs for various types of search noted that it excelled at meal plans and organising a grocery list, although the bot was less effective at product searches, needing a few prompts to return the right type of headphones, and in one instance surfacing discontinued products.

Similarly, Search Engine Land’s Nicole Farley used Bing’s chatbot to research nearby Seattle coffee shops and was able to use the chat interface to quickly find out the opening hours for the second listed result – useful. However, when she asked the chatbot which coffee shop it recommended, the bot simply generated an entirely new list of five results – not quite what was asked for.

The potential for the Bing chatbot to act as an assistant to accomplish tasks is definitely there, and in my opinion this would be a more productive – and less risky – direction for Microsoft to push in given LLMs’ tendency to get creative with facts. However, Bing would need to narrow its vision – and the scope of its chatbot – to focus on task-based queries, which would mean compromising its ability to present itself as a competitor to Google in search. It’s less glamorous than “the world’s AI-powered answer engine”, but an answer engine is also only as good as its answers.

What about Google’s ambition for Bard? In his presentation of the new chat technology, Senior Vice President Prabhakar Raghavan indicated that Google does want to focus on queries that are more nuanced (what Google calls NORA, or ‘No One Right Answer’) rather than purely fact-based questions, the latter being well-served by Featured Snippets and the Knowledge Graph. However, he also waxed lyrical about Google’s capacity to “Deeply understand the world’s information”, as well as how Bard is “Combining the world’s knowledge with the power of LaMDA”.

It’s in keeping with Google’s brand to want to present Bard as all-knowing and all-capable, but the mistake in the Bard announcement has already punctured that illusion. Perhaps the experience will encourage Google to take a more realistic approach to Bard, at least in the short term.

How much do SEOs and publishers need to worry?

The demonstrations from Bing and Google have undoubtedly left many SEOs and publishers wondering how much AI chatbots are going to upend the status quo. Even if a list of search results is included alongside a chatbot answer, the chatbot answer is featured more prominently, and it is not altogether clear what mechanism causes certain websites to be surfaced in a chatbot response.

Do chatbots select from the top-ranking websites? Is it about relevance? Are some sites easier to pull information from than others? And is that really a good thing, given that searchers reading a rehashed summary of a website’s key points in a chatbot response may not bother to click through to the originating site? Can AI-generated chatbot answers be optimised for?

There are a lot of unanswered questions that could become more urgent – but only if AI-powered search catches on. Bing is only rolling out its new search experience in a limited fashion (again, cognisant of the risks involved) and Google, while having promised to release Bard to the public “in the coming weeks”, has noticeably avoided committing to a launch date. The reviews coming from those who have tried out Bing’s new chat experience are mixed, and it is not altogether clear that LLMs offer a better search experience than can be obtained with a few human queries.

Satya Nadella, in his introduction to Microsoft’s presentation of the AI-powered Bing, called it “high time” that search was innovated on. To be sure, there is a growing sense that the experience of search has got progressively ‘worse’ over the past several years – just Google (or Bing, if you fancy) “search has got worse” to see the evidence of this sentiment around the web. What is not certain is whether generative AI is the solution to this problem, or whether it will add to it.
