The journey from a code's inception to its delivery is full of challenges: bugs, security vulnerabilities, and tight delivery timelines. The traditional methods of tackling these challenges, such as manual code reviews or bug tracking systems, now appear sluggish amid the growing demands of today's fast-paced technological landscape. Product managers and their teams must find a delicate equilibrium between reviewing code, fixing bugs, and adding new features to deploy quality software on time. That's where large language models (LLMs) and artificial intelligence (AI) come in: they can analyze more information in less time than even the most experienced team of human developers could.

Speeding up code reviews is one of the most effective actions to improve software delivery performance, according to Google's State of DevOps Report 2023. Teams that have successfully implemented faster code review strategies have 50% higher software delivery performance on average. However, LLMs and AI tools capable of assisting in these tasks are very new, and most companies lack sufficient guidance or frameworks to integrate them into their processes.

In the same report from Google, when companies were asked about the importance of different practices in software development tasks, the average score they assigned to AI was 3.3/10. Tech leaders understand the importance of faster code review, the survey found, but don't know how to leverage AI to get it.

With this in mind, my team at Code We Trust and I created an AI-driven framework that monitors and enhances the speed of quality assurance (QA) and software development. By harnessing the power of source code analysis, this approach assesses the quality of the code being developed, classifies the maturity level of the development process, and provides product managers and leaders with valuable insights into the potential cost reductions following quality improvements. With this information, stakeholders can make informed decisions regarding resource allocation and prioritize initiatives that drive quality improvements.

Low-quality Software Is Expensive

Numerous factors affect the cost and ease of resolving bugs and defects, including:

- Bug severity and complexity.

- Stage of the software development life cycle (SDLC) in which they are identified.

- Availability of resources.

- Quality of the code.

- Communication and collaboration within the organization.

- Compliance requirements.

- Impact on users and business.

- Testing environment.

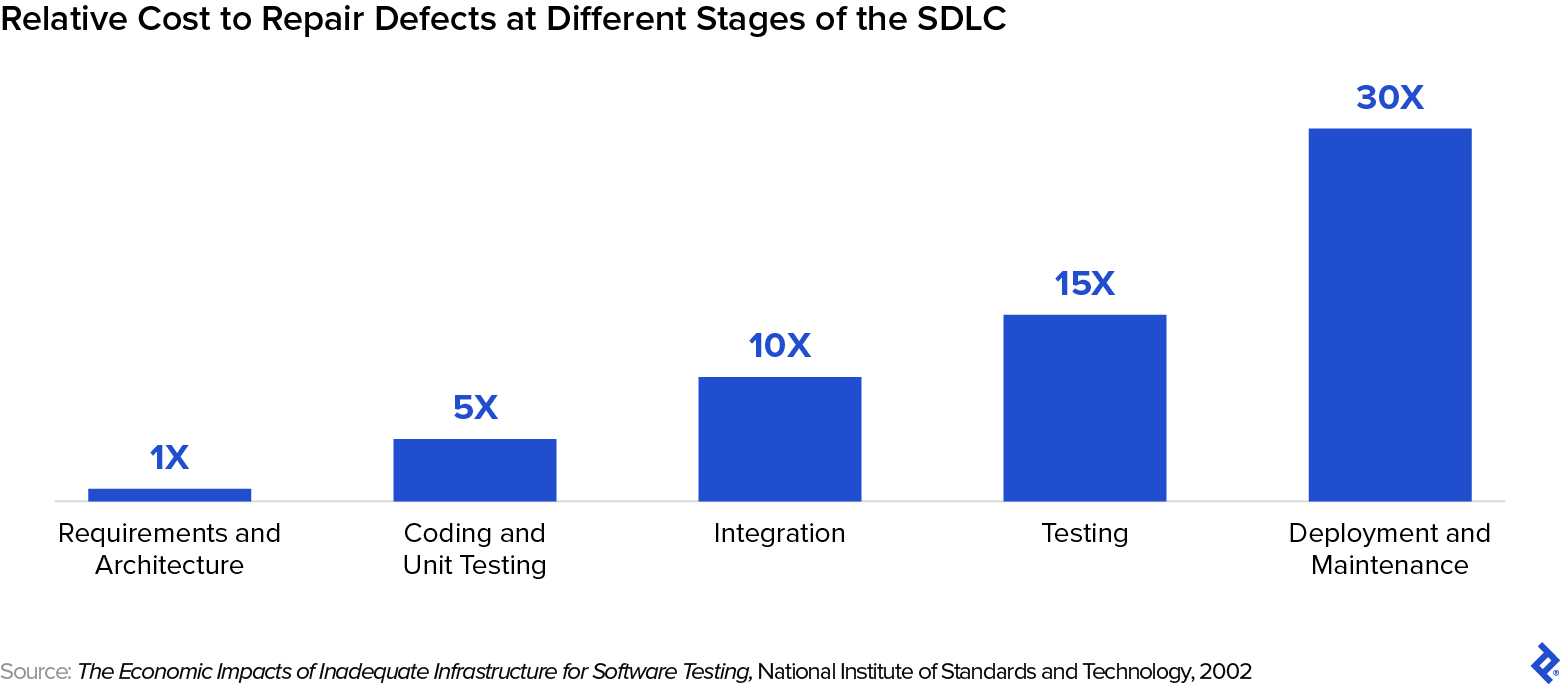

This host of factors makes it difficult to calculate software development costs directly via algorithms. However, the cost of identifying and rectifying defects in software tends to increase exponentially as the software progresses through the SDLC.

The National Institute of Standards and Technology reported that the cost of fixing a software defect found during testing is five times higher than fixing one identified during design, and the cost to fix bugs found during deployment can be six times higher than that.
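Because the multipliers compound, a deployment-phase fix ends up costing roughly 30 times a design-phase fix. The sketch below expresses this as relative costs, taking a design-phase fix as one unit. The unit cost and stage names are illustrative assumptions; only the five-times and six-times factors come from the figures cited above.

```python
# Relative cost of fixing a defect, based on the multipliers cited above:
# a testing-phase fix costs 5x a design-phase fix, and a deployment-phase
# fix costs 6x a testing-phase fix (so 30x a design-phase fix).
DESIGN_FIX_COST = 1.0  # illustrative unit cost, not a measured figure

STAGE_MULTIPLIER = {
    "design": 1,
    "testing": 5,
    "deployment": 5 * 6,
}


def relative_fix_cost(stage: str, defects: int = 1) -> float:
    """Cost of fixing `defects` defects discovered at `stage`, in design-fix units."""
    return DESIGN_FIX_COST * STAGE_MULTIPLIER[stage] * defects


if __name__ == "__main__":
    for stage in STAGE_MULTIPLIER:
        print(f"{stage:>10}: {relative_fix_cost(stage, defects=10):.0f} units for 10 defects")
```

Under these assumptions, the same ten defects cost 10 units at design time, 50 during testing, and 300 after deployment.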

Clearly, fixing bugs during the early stages is cheaper and more efficient than addressing them later. The industrywide acceptance of this principle has further driven the adoption of proactive measures, such as thorough design reviews and robust testing frameworks, to catch and correct software defects at the earliest stages of development.

By fostering a culture of continuous improvement and learning through a rapid adoption of AI, organizations are not merely fixing bugs; they are cultivating a mindset that constantly seeks to push the boundaries of what is achievable in software quality.

Implementing AI in High quality Assurance

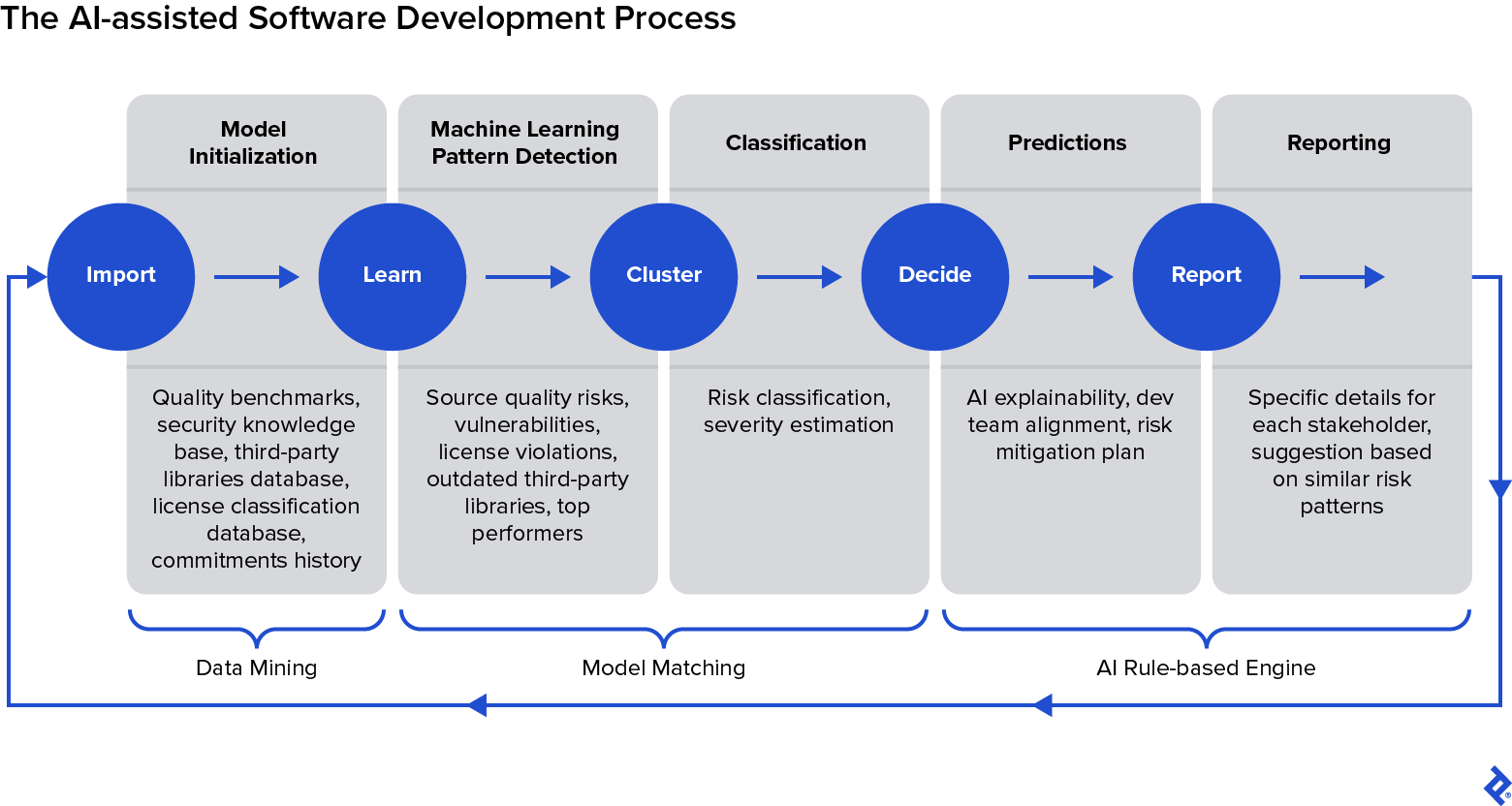

This three-step implementation framework introduces an easy set of AI for QA guidelines pushed by intensive code evaluation information to judge code high quality and optimize it utilizing a pattern-matching machine studying (ML) strategy. We estimate bug fixing prices by contemplating developer and tester productiveness throughout SDLC phases, evaluating productiveness charges to assets allotted for function growth: The upper the proportion of assets invested in function growth, the decrease the price of unhealthy high quality code and vice versa.
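The framework's exact cost formula isn't given here, but the relationship can be sketched as follows, assuming each team member's monthly cost and the share of their time spent on new features are known. The `TeamMember` type and the choice to treat all non-feature spend as the cost of poor quality are simplifying assumptions for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TeamMember:
    role: str             # e.g. "developer" or "tester"
    monthly_cost: float   # fully loaded monthly cost (illustrative currency units)
    feature_share: float  # fraction of time spent building new features, 0..1


def cost_of_poor_quality(team: list[TeamMember]) -> float:
    """Monthly spend that does not go into feature work (bug fixing, rework, retesting)."""
    return sum(m.monthly_cost * (1.0 - m.feature_share) for m in team)


def feature_investment_ratio(team: list[TeamMember]) -> float:
    """Share of total spend invested in features; a higher ratio means a lower cost of poor quality."""
    total = sum(m.monthly_cost for m in team)
    if total == 0:
        raise ValueError("team has no cost to allocate")
    return sum(m.monthly_cost * m.feature_share for m in team) / total


team = [
    TeamMember("developer", 10_000, 0.7),
    TeamMember("developer", 10_000, 0.5),
    TeamMember("tester", 8_000, 0.25),
]
print(f"Cost of poor quality: {cost_of_poor_quality(team):,.0f} per month")
print(f"Feature investment ratio: {feature_investment_ratio(team):.0%}")
```

With these hypothetical numbers, half of the 28,000 monthly spend (14,000) goes to non-feature work; shifting effort toward features lowers that figure.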

Define Quality Through Data Mining

The standards for code quality are not easy to determine, because quality is relative and depends on various factors. Any QA process compares the actual state of a product with something considered “perfect.” Automakers, for example, match an assembled car with the car's original design, considering the average number of imperfections detected over all the sample sets. In fintech, quality is usually defined by identifying transactions misaligned with the legal framework.

In software development, we can employ a range of tools to analyze our code: linters for code scanning, static application security testing for spotting security vulnerabilities, software composition analysis for inspecting open-source components, license compliance checks for legal adherence, and productivity analysis tools for gauging development efficiency.

Of the many variables our analysis can yield, let's focus on six key software QA characteristics:

- Defect density: The number of confirmed bugs or defects per unit of software size, typically measured per thousand lines of code

- Code duplications: Repeated occurrences of the same code within a codebase, which can lead to maintenance challenges and inconsistencies

- Hardcoded tokens: Fixed data values embedded directly into the source code, which can pose a security risk if they include sensitive information like passwords

- Security vulnerabilities: Weaknesses or flaws in a system that could be exploited to cause harm or gain unauthorized access

- Outdated packages: Older versions of software libraries or dependencies that may lack recent bug fixes or security updates

- Nonpermissive open-source libraries: Open-source libraries with restrictive licenses that can limit how the software can be used or distributed
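To make these characteristics comparable across codebases, it helps to record them in one structure and normalize where possible. The sketch below is a minimal, hypothetical container for the six counts; the field names and example values are illustrative, and only defect density is derived here, using the per-thousand-lines definition above.

```python
from dataclasses import dataclass


@dataclass
class QualitySnapshot:
    """Raw counts for the six QA characteristics, as reported by the scanning tools."""
    lines_of_code: int
    confirmed_defects: int
    duplicated_blocks: int
    hardcoded_tokens: int
    vulnerabilities: int
    outdated_packages: int
    nonpermissive_licenses: int

    @property
    def defect_density(self) -> float:
        """Confirmed defects per thousand lines of code (KLOC)."""
        if self.lines_of_code <= 0:
            raise ValueError("lines_of_code must be positive")
        return self.confirmed_defects / (self.lines_of_code / 1000)


snapshot = QualitySnapshot(
    lines_of_code=120_000,
    confirmed_defects=300,
    duplicated_blocks=85,
    hardcoded_tokens=12,
    vulnerabilities=7,
    outdated_packages=30,
    nonpermissive_licenses=2,
)
print(f"Defect density: {snapshot.defect_density:.2f} per KLOC")  # Defect density: 2.50 per KLOC
```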

Companies should prioritize the characteristics most relevant to their clients to minimize change requests and maintenance costs. While there could be more variables, the framework remains the same.

After completing this internal analysis, it's time to look for a point of reference for high-quality software. Product managers should curate a collection of source code from products in their own market sector. The code of open-source projects is publicly available and can be accessed from repositories on platforms such as GitHub, GitLab, or the project's own version control system. Choose the same quality variables identified earlier and record their average, maximum, and minimum values. These values will be your quality benchmark.

You shouldn't compare apples to oranges, especially in software development. If we were to compare the quality of one codebase to another that uses an entirely different tech stack, serves another market sector, or differs significantly in maturity level, the quality assurance conclusions could be misleading.
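Building the benchmark amounts to filtering reference projects down to true peers and then recording the minimum, average, and maximum of each quality variable. The sketch below assumes each reference project has already been scanned and tagged with its sector and stack; the `ReferenceProject` type and project names are hypothetical, and maturity level could be added as another filter in the same way.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class ReferenceProject:
    name: str
    sector: str
    stack: str
    metrics: dict[str, float]  # quality variable -> measured value


def build_benchmark(projects, sector, stack, metric_names):
    """Return {metric: (minimum, average, maximum)} over comparable projects only."""
    # Avoid comparing apples to oranges: keep peers with the same sector and stack.
    peers = [p for p in projects if p.sector == sector and p.stack == stack]
    if not peers:
        raise ValueError(f"no reference projects for {sector}/{stack}")
    benchmark = {}
    for metric in metric_names:
        values = [p.metrics[metric] for p in peers]
        benchmark[metric] = (min(values), mean(values), max(values))
    return benchmark


projects = [
    ReferenceProject("payments-api", "fintech", "python", {"defect_density": 1.0}),
    ReferenceProject("ledger-service", "fintech", "python", {"defect_density": 3.0}),
    ReferenceProject("game-engine", "gaming", "c++", {"defect_density": 9.0}),
]
print(build_benchmark(projects, "fintech", "python", ["defect_density"]))
# {'defect_density': (1.0, 2.0, 3.0)}
```

The gaming project is excluded, so its much higher defect density does not distort the fintech benchmark.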

Train and Run the Model

At this point in the AI-assisted QA framework, we need to train an ML model using the information obtained in the quality assessment. This model should analyze code, filter results, and classify the severity of bugs and issues according to a defined set of rules.

The training data should encompass various sources of information, such as quality benchmarks, security knowledge databases, a third-party libraries database, and a license classification database. The quality and accuracy of the model will depend on the data fed to it, so a meticulous selection process is paramount. I won't venture into the specifics of training ML models here, as the focus is on outlining the steps of this novel framework. But there are several guides you can consult that discuss ML model training in detail.
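As a toy illustration of learning severity labels from examples, the sketch below uses a nearest-centroid classifier. This is a swapped-in technique chosen for brevity, not the model used in the framework. The two features (a normalized base score and whether the issue is exposed to users) and the labeled samples are hypothetical; a real model would draw richer features from the databases listed above.

```python
from collections import defaultdict
from math import dist

# Hypothetical labeled findings: (normalized base score, exposed to users 0/1) -> severity.
TRAINING_SAMPLES = [
    ((0.9, 1.0), "blocker"),
    ((0.8, 1.0), "blocker"),
    ((0.5, 1.0), "high"),
    ((0.6, 0.0), "high"),
    ((0.2, 0.0), "low"),
    ((0.1, 0.0), "low"),
]


def train_centroids(samples):
    """Average the feature vectors of each severity label (nearest-centroid training)."""
    sums = {}
    counts = defaultdict(int)
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] += 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def classify(centroids, features):
    """Assign the severity whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


centroids = train_centroids(TRAINING_SAMPLES)
print(classify(centroids, (0.95, 1.0)))  # blocker
print(classify(centroids, (0.15, 0.1)))  # low
```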

Once you are comfortable with your ML model, it's time to let it analyze the software and compare it to your benchmark and quality variables. ML can explore millions of lines of code in a fraction of the time it would take a human to complete the task. Each analysis can yield valuable insights, directing the focus toward areas that require improvement, such as code cleanup, security issues, or license compliance updates.

But before addressing any issue, it's essential to define which vulnerabilities will yield the best results for the business if fixed, based on the severity detected by the model. Software will always ship with potential vulnerabilities, but the product manager and product team should aim for a balance between features, costs, time, and security.
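One simple way to strike that balance is to rank findings by business value per day of effort and fill a fixed remediation budget. The sketch below uses a greedy heuristic (an approximation to the knapsack problem, not guaranteed optimal); the severity weights, finding IDs, and effort figures are hypothetical and should be tuned to your own risk appetite.

```python
from dataclasses import dataclass

# Assumed business weight per severity level; tune these to your own risk appetite.
SEVERITY_WEIGHT = {"blocker": 10, "high": 5, "medium": 2, "low": 1}


@dataclass
class Finding:
    finding_id: str
    severity: str
    effort_days: float


def plan_fixes(findings, budget_days):
    """Greedily pick findings with the highest severity weight per day of effort,
    skipping any that no longer fit in the remaining budget."""
    for f in findings:
        if f.effort_days <= 0:
            raise ValueError(f"{f.finding_id}: effort must be positive")
    ranked = sorted(
        findings,
        key=lambda f: SEVERITY_WEIGHT[f.severity] / f.effort_days,
        reverse=True,  # sort stays stable, so ties keep their input order
    )
    plan, used = [], 0.0
    for f in ranked:
        if used + f.effort_days <= budget_days:
            plan.append(f)
            used += f.effort_days
    return plan


findings = [
    Finding("SEC-1", "blocker", 2),
    Finding("SEC-2", "high", 1),
    Finding("DUP-7", "low", 3),
    Finding("LIC-3", "medium", 4),
]
print([f.finding_id for f in plan_fixes(findings, budget_days=5)])  # ['SEC-1', 'SEC-2']
```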

Because this framework is iterative, every AI QA cycle takes the code closer to the established quality benchmark, fostering continuous improvement. This systematic approach not only elevates code quality and lets developers fix critical bugs earlier in the development process, but also instills a disciplined, quality-centric mindset in them.

Report, Predict, and Iterate

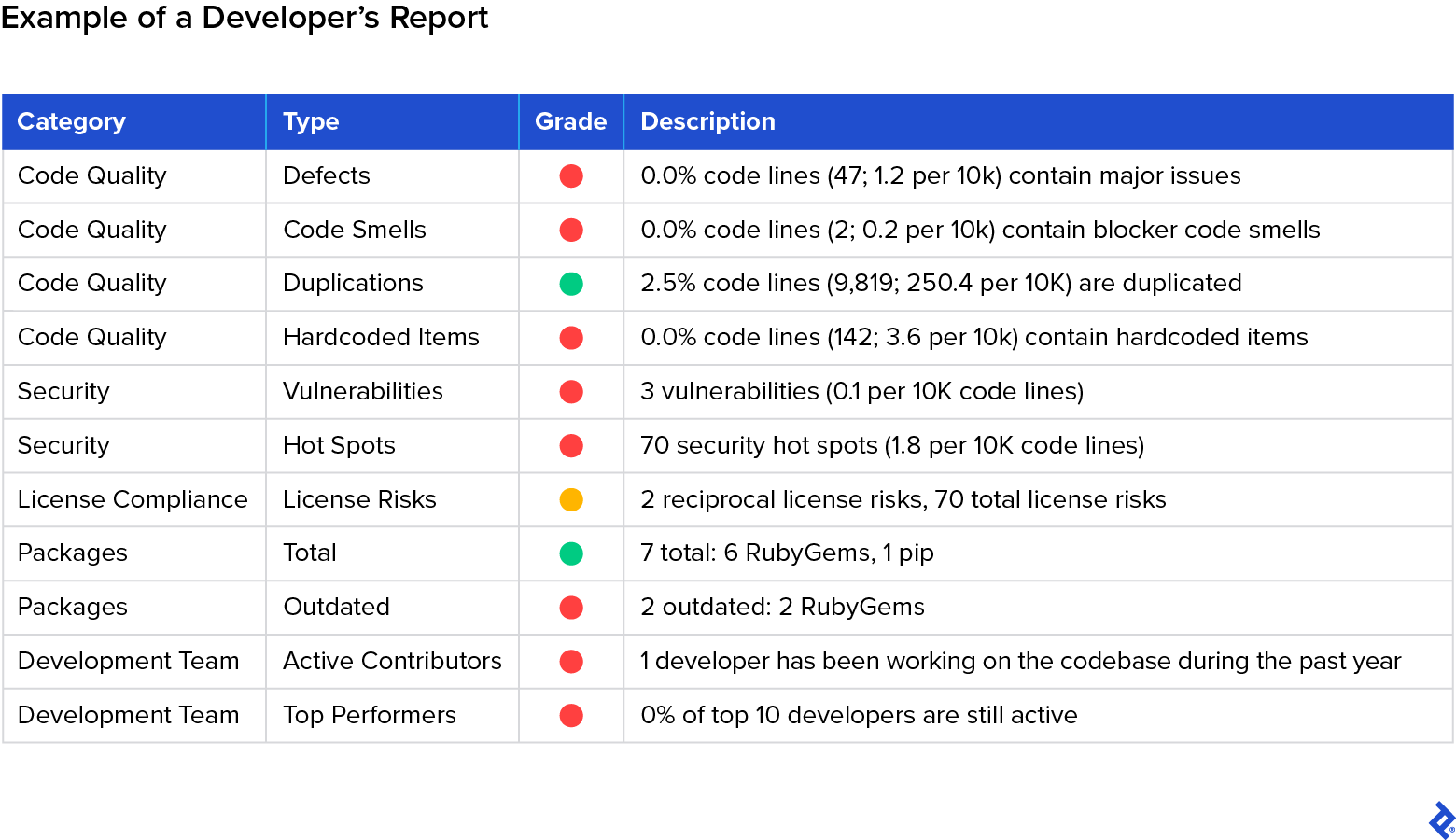

In the previous step, the ML model analyzed the code against the quality benchmark and provided insights into technical debt and other areas in need of improvement. However, for many stakeholders this data, as in the example presented below, won't mean much.

| Area | Findings | Estimated effort | Assumptions |
|---|---|---|---|
| Quality | 445 bugs, 3,545 code smells | ~500 days | Only blockers and high-severity issues will be resolved |
| Security | 55 vulnerabilities, 383 security hot spots | ~100 days | All vulnerabilities will be resolved and the higher-severity hot spots will be inspected |
| Secrets | 801 hardcoded risks | ~50 days | |
| Outdated Packages | 496 outdated packages (>3 years) | ~300 days | |
| Duplicated Blocks | 40,156 blocks | ~150 days | Only the bigger blocks will be revised |
| High-risk Licenses | 20 issues in React code | ~20 days | All the issues will be resolved |
| Total | | 1,120 days | |
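A raw total like this is hard to act on until it is translated into calendar time for a real team. The sketch below sums the table's estimates and converts them, assuming the estimates are person-days; the team size and the share of time the team can devote to remediation are hypothetical inputs.

```python
# Effort estimates from the report above, in days (the table's "~" figures).
EFFORT_DAYS = {
    "Quality": 500,
    "Security": 100,
    "Secrets": 50,
    "Outdated Packages": 300,
    "Duplicated Blocks": 150,
    "High-risk Licenses": 20,
}


def remediation_summary(effort_days, developers, focus_share):
    """Translate total effort into calendar days for a team that spends only
    `focus_share` of its time on remediation (both inputs are assumptions)."""
    total = sum(effort_days.values())
    calendar_days = total / (developers * focus_share)
    return total, calendar_days


total, calendar = remediation_summary(EFFORT_DAYS, developers=8, focus_share=0.25)
print(f"Total effort: {total:,} days")                          # Total effort: 1,120 days
print(f"With 8 developers at 25% focus: ~{calendar:.0f} days")  # ~560 days
```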

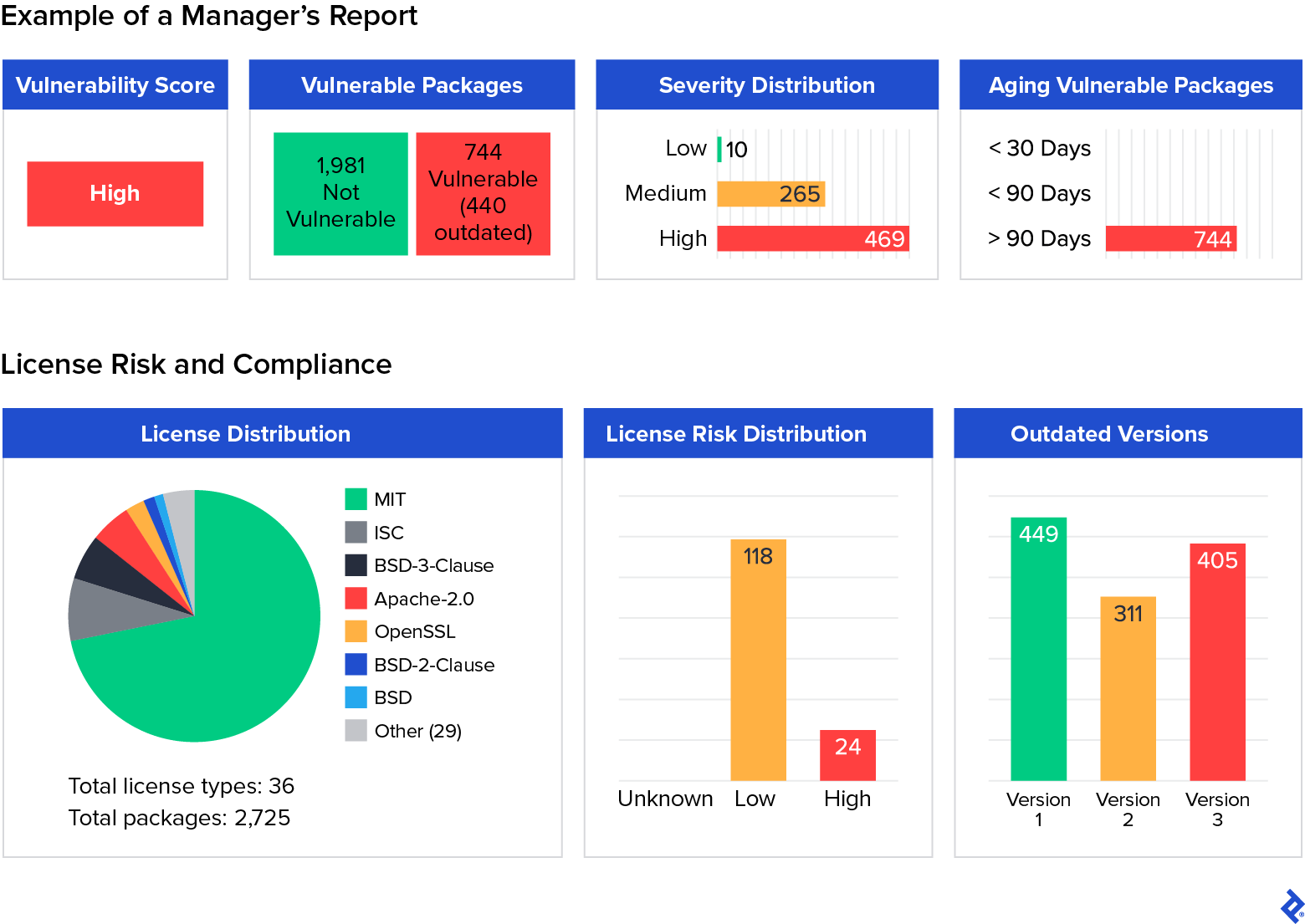

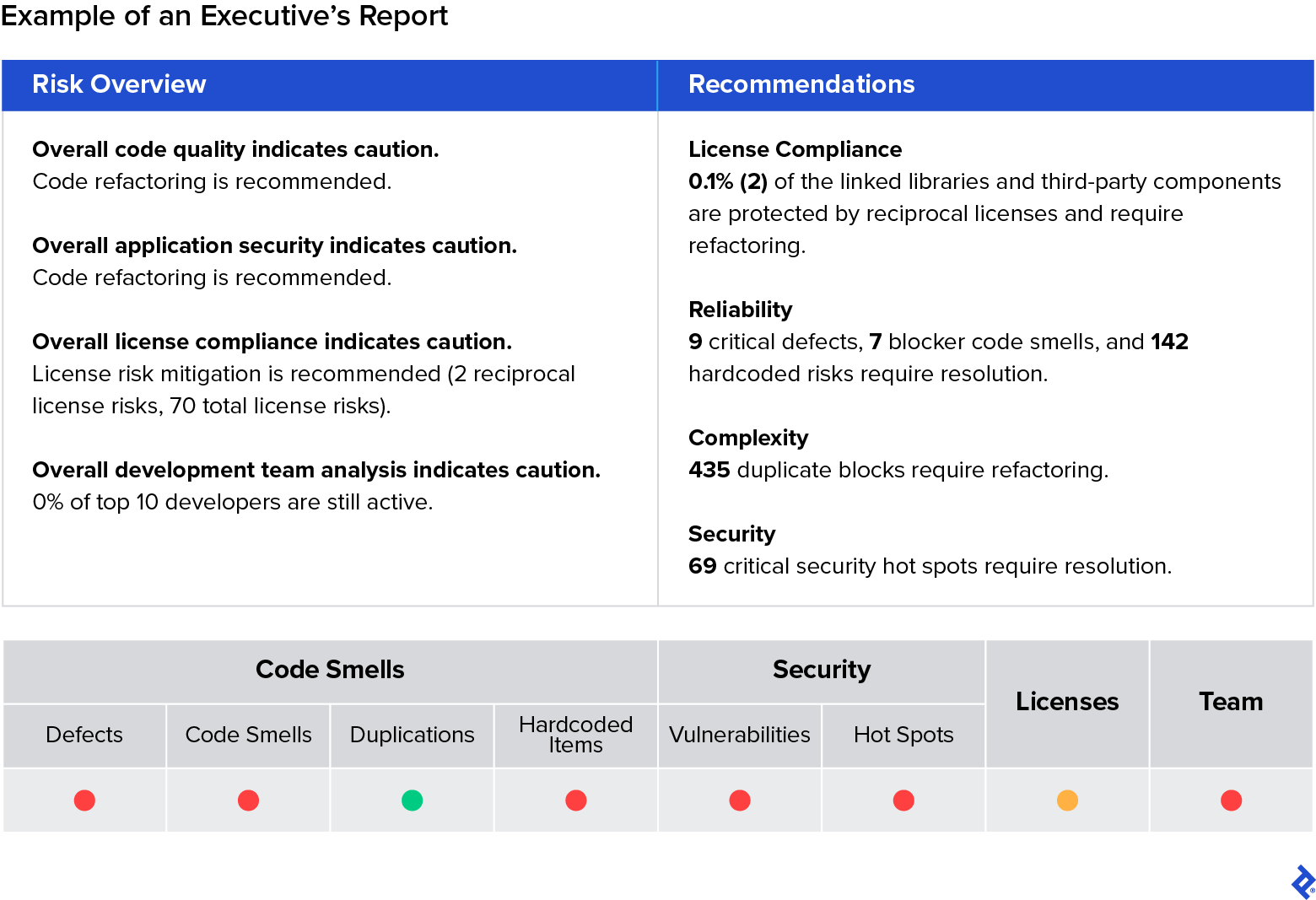

An automated reporting step is therefore crucial to making informed decisions. We achieve this by feeding an AI rule engine with the information obtained from the ML model, data on the development team's composition and alignment, and the risk mitigation strategies available to the company. This way, each of the three levels of stakeholders (developers, managers, and executives) receives a tailored report highlighting its most salient pain points, as illustrated in the example reports above.
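The rule engine itself isn't described in detail, but the core idea of tailoring one set of findings to three audiences can be sketched as follows. The `Finding` fields, file paths, and daily cost are hypothetical; the point is that developers get ordered work items, managers get effort per area, and executives get a single cost figure.

```python
from dataclasses import dataclass

SEVERITY_ORDER = {"blocker": 0, "high": 1, "medium": 2, "low": 3}


@dataclass
class Finding:
    area: str           # e.g. "Security" or "Outdated Packages"
    location: str       # file or module where the issue was detected
    severity: str       # "blocker", "high", "medium", or "low"
    effort_days: float  # estimated effort to fix


def developer_report(findings):
    """Concrete work items, most severe first."""
    ordered = sorted(findings, key=lambda item: SEVERITY_ORDER[item.severity])
    return [f"{item.severity.upper()}: {item.area} in {item.location}" for item in ordered]


def manager_report(findings):
    """Estimated effort per area, for capacity planning."""
    totals = {}
    for item in findings:
        totals[item.area] = totals.get(item.area, 0.0) + item.effort_days
    return totals


def executive_report(findings, daily_cost):
    """A single figure: the estimated cost of remediating every finding."""
    return sum(item.effort_days for item in findings) * daily_cost


findings = [
    Finding("Security", "src/auth/session.py", "blocker", 2),
    Finding("Outdated Packages", "requirements.txt", "medium", 3),
    Finding("Security", "src/api/upload.py", "high", 1),
]
print(developer_report(findings)[0])               # BLOCKER: Security in src/auth/session.py
print(manager_report(findings))                    # {'Security': 3.0, 'Outdated Packages': 3.0}
print(executive_report(findings, daily_cost=600))  # 3600
```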

Additionally, a predictive component is activated when this process iterates multiple times, enabling the detection of spikes in quality variation. For instance, a discernible pattern of quality deterioration might emerge under conditions faced before, such as increased commits during a release phase. This predictive capability helps teams anticipate and address potential quality issues preemptively, further strengthening the software development process.
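The predictive model isn't specified, but a rolling z-score is one simple, swapped-in way to flag a quality spike once a few iterations of history exist. The sketch below flags any cycle whose metric deviates from the mean of the preceding cycles by more than a chosen number of standard deviations, in either direction. The window size, threshold, and defect-density series are hypothetical.

```python
from statistics import mean, stdev


def detect_spikes(series, window=5, threshold=3.0):
    """Return indices whose value deviates from the mean of the preceding `window`
    values by more than `threshold` sample standard deviations (a rolling z-score)."""
    spikes = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Flat history: any change at all counts as a spike.
            if series[i] != mu:
                spikes.append(i)
            continue
        if abs(series[i] - mu) / sigma > threshold:
            spikes.append(i)
    return spikes


# Hypothetical defect density (per KLOC) measured at the end of each QA cycle.
defect_density = [2.0, 2.1, 1.9, 2.0, 2.2, 2.1, 3.4, 2.0]
print(detect_spikes(defect_density))  # [6]
```

Correlating flagged cycles with context such as commit volume or release dates is what would turn detection into prediction.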

After this step, the process cycles back to the initial data mining phase, starting another round of analysis and insights. Each iteration of the cycle produces more data and refines the ML model, progressively improving the accuracy and effectiveness of the process.

In the modern era of software development, striking the right balance between shipping products quickly and ensuring their quality is a cardinal challenge for product managers. The unrelenting pace of technological evolution demands a robust, agile, and intelligent approach to managing software quality. The integration of AI in quality assurance discussed here represents a paradigm shift in how product managers can navigate this delicate balance. By adopting an iterative, data-informed, and AI-enhanced framework, product managers now have a potent tool at their disposal. This framework facilitates a deeper understanding of the codebase, illuminates the technical debt landscape, and prioritizes actions that yield substantial value, all while accelerating the quality assurance review process.