Generative AI is reshaping domains such as content creation, marketing, and healthcare by autonomously producing high-quality, varied content. Its ability to automate mundane tasks and support intelligent decision-making has led to its integration into business applications such as chatbots and predictive analytics. However, a significant challenge remains: ensuring that the generated content is coherent and contextually relevant.

Enter pre-trained models. These models, already trained on extensive data, excel at text generation. But they are not without flaws: they often require fine-tuning to meet the specific demands of particular applications or domains. Fine-tuning, the process of optimizing and customizing these models with new, relevant data, has thus become an indispensable step in leveraging generative AI effectively.

This article aims to demystify key aspects of leveraging pre-trained models in generative AI applications.

Pre-trained models have been trained on extensive datasets, equipping them to handle tasks including natural language processing (NLP), speech recognition, and image recognition. They save time, money, and resources because they come with learned features and patterns, enabling developers and researchers to achieve high accuracy without starting from scratch.

Popular pre-trained models for generative AI applications:

- GPT-3: Developed by OpenAI, it generates human-like text based on prompts and is versatile across a range of language-related tasks.

- DALL-E: Also from OpenAI, it creates images that match input text descriptions.

- BERT: Google’s model is well suited to tasks like question answering, sentiment analysis, and language translation.

- StyleGAN: NVIDIA’s model generates high-quality images of animals, faces, and more.

- VQGAN + CLIP: An approach popularized by the EleutherAI community that combines a generative image model (VQGAN) with a language-image model (CLIP) to create images from text prompts.

- Whisper: OpenAI’s versatile speech model handles multilingual speech recognition, speech translation, and language identification.

Fine-tuning is a method used to optimize a model’s performance for specific tasks or domains. In healthcare, for example, this technique could refine models for specialized applications like cancer detection. At the heart of fine-tuning lie pre-trained models, which have already been trained on vast datasets for generic tasks such as natural language processing (NLP) or image classification. Once this foundational training is complete, the model can be further refined, or fine-tuned, for related tasks that may have fewer labeled data points available.

Central to the fine-tuning process is the concept of transfer learning. Here, a pre-trained model serves as a starting point, and its knowledge is leveraged to train a new model for a related yet distinct task. This approach minimizes the need for large volumes of labeled data, offering a strategic advantage in situations where obtaining such data is difficult or expensive.

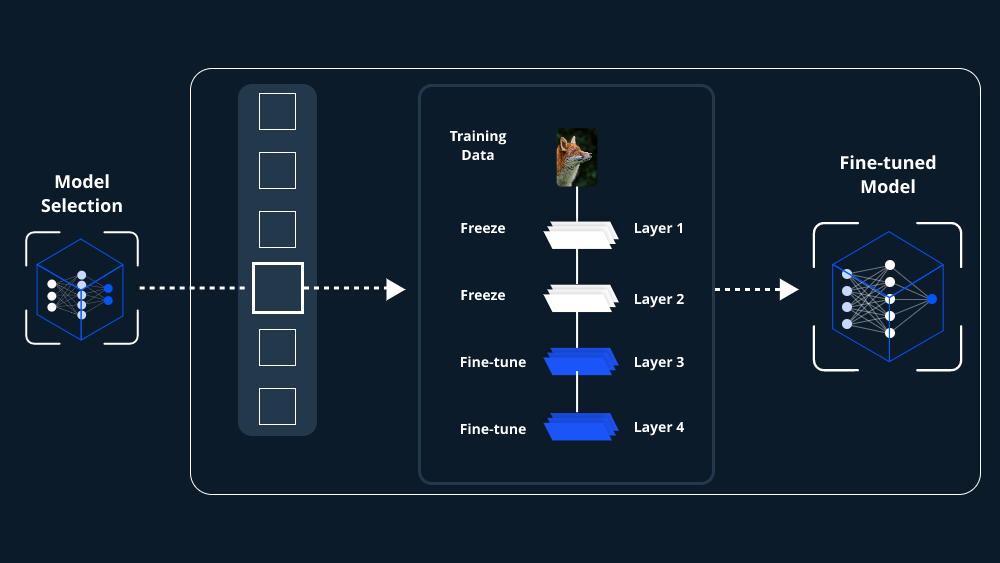

Fine-tuning a pre-trained model involves updating its parameters with available labeled data rather than starting the training process from scratch. The process consists of the following steps:

- Loading the pre-trained model: Begin by selecting and loading a pre-trained model that has already learned from extensive data relevant to a related task.

- Adapting the model for the new task: After loading the pre-trained model, modify its top layers to suit the specific requirements of the new task. This adaptation is necessary because the top layers are often task-specific.

- Freezing specific layers: Typically, the earlier layers of a pre-trained model, which are responsible for low-level feature extraction, are frozen. The model thereby retains its learned general features, which can prevent overfitting on the limited labeled data available for the new task.

- Training the new layers: Use the available labeled data to train the newly introduced layers while keeping the weights of the existing layers constant. This lets the new layers adapt to the new task on top of the pre-trained feature representations.

- Fine-tuning the model: After training the new layers, you can fine-tune the whole model on the new task, making the most of the limited data available.
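
The five steps above can be sketched in PyTorch roughly as follows. This is a minimal illustration under stated assumptions, not a definitive recipe: the small `backbone` network, the three-class `head`, and the random tensors are hypothetical stand-ins, and in a real project the backbone would be loaded with actual pre-trained weights and the data would come from the target task.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Step 1 (stand-in): a "pre-trained" backbone. Hypothetical: its weights are
# random here, whereas a real backbone would be loaded from a checkpoint.
backbone = nn.Sequential(
    nn.Linear(16, 32), nn.ReLU(),
    nn.Linear(32, 32), nn.ReLU(),
)

# Step 2: adapt the model by attaching a new task-specific head
# (3 output classes is an assumption for this example).
head = nn.Linear(32, 3)
model = nn.Sequential(backbone, head)

# Step 3: freeze the pre-trained layers so their weights stay fixed.
for p in backbone.parameters():
    p.requires_grad = False

# Toy labeled data standing in for the new task's dataset (hypothetical).
x = torch.randn(64, 16)
y = torch.randint(0, 3, (64,))
loss_fn = nn.CrossEntropyLoss()


def train(params, lr, epochs):
    """Run full-batch training on the given parameters; return the last loss."""
    opt = torch.optim.Adam(params, lr=lr)
    for _ in range(epochs):
        opt.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        opt.step()
    return loss.item()


with torch.no_grad():
    initial_loss = loss_fn(model(x), y).item()
frozen_before = [p.detach().clone() for p in backbone.parameters()]

# Step 4: train only the new head while the backbone stays frozen.
head_loss = train(head.parameters(), lr=1e-2, epochs=50)
backbone_unchanged = all(
    torch.equal(before, after)
    for before, after in zip(frozen_before, backbone.parameters())
)

# Step 5: unfreeze everything and fine-tune the whole model,
# using a much smaller learning rate than for the head alone.
for p in backbone.parameters():
    p.requires_grad = True
full_loss = train(model.parameters(), lr=1e-4, epochs=20)

print(f"initial={initial_loss:.3f} head-only={head_loss:.3f} full={full_loss:.3f}")
print("backbone unchanged during head training:", backbone_unchanged)
```

The learning rates and epoch counts are illustrative values only. The key design choice is the two-phase schedule: the randomly initialized head is trained first so that its large early gradients do not disturb the pre-trained weights.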

When fine-tuning a pre-trained model, adhering to best practices is essential for achieving good results. Here are key guidelines to consider:

- Understand the pre-trained model: Thoroughly understand the architecture, strengths, limitations, and original task of the pre-trained model. This understanding informs the necessary modifications and adjustments.

- Choose a relevant pre-trained model: Select a model closely aligned with your target task or domain. Models trained on similar data or related tasks provide a solid foundation for fine-tuning.

- Freeze early layers: Preserve the generic features and patterns learned by the lower layers of the pre-trained model by freezing them. This prevents the loss of valuable knowledge and streamlines task-specific fine-tuning.

- Adjust the learning rate: Experiment with different learning rates during fine-tuning, typically choosing a lower rate than in the initial pre-training phase. Gradual adaptation helps preserve the pre-trained knowledge and prevent overfitting.

- Leverage transfer learning techniques: Use techniques like feature extraction or gradual unfreezing to improve fine-tuning. These techniques retain and transfer valuable knowledge effectively.

- Apply model regularization: To prevent overfitting, employ regularization techniques like dropout or weight decay as safeguards. These measures improve generalization and reduce memorization of training examples.

- Continuously monitor performance: Regularly evaluate the fine-tuned model on validation datasets, using appropriate metrics to guide adjustments and refinements.

- Embrace data augmentation: Increase training data diversity and generalizability by applying transformations, perturbations, or noise. This practice leads to more robust fine-tuning results.

- Consider domain adaptation: When the target task differs significantly from the pre-training data, explore domain adaptation techniques to bridge the gap and improve model performance.

- Save checkpoints regularly: Protect your progress and prevent data loss by saving model checkpoints frequently. This practice facilitates recovery and allows for the exploration of different fine-tuning strategies.
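
Several of these guidelines can be combined in a single training loop. The PyTorch sketch below is one possible arrangement, assuming a hypothetical stand-in `backbone`, random toy data, and illustrative hyperparameters. It uses a lower learning rate for the pre-trained layers than for the new head, dropout and weight decay for regularization, noise-based augmentation, validation monitoring, and checkpointing of the best model so far.

```python
import os
import tempfile

import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical stand-ins: a "pre-trained" backbone and a new head with dropout.
backbone = nn.Sequential(nn.Linear(16, 32), nn.ReLU())
head = nn.Sequential(nn.Dropout(p=0.1), nn.Linear(32, 3))
model = nn.Sequential(backbone, head)

# Lower learning rate for pre-trained layers, higher for the new head;
# weight decay acts as regularization on both parameter groups.
optimizer = torch.optim.AdamW(
    [
        {"params": backbone.parameters(), "lr": 1e-5},
        {"params": head.parameters(), "lr": 1e-3},
    ],
    weight_decay=0.01,
)

# Toy training and validation splits (random data, for illustration only).
x_train, y_train = torch.randn(64, 16), torch.randint(0, 3, (64,))
x_val, y_val = torch.randn(32, 16), torch.randint(0, 3, (32,))
loss_fn = nn.CrossEntropyLoss()

checkpoint_path = os.path.join(tempfile.mkdtemp(), "best.pt")
best_val = float("inf")

for epoch in range(10):
    model.train()
    # Simple data augmentation: perturb the inputs with small Gaussian noise.
    noisy = x_train + 0.01 * torch.randn_like(x_train)
    optimizer.zero_grad()
    loss_fn(model(noisy), y_train).backward()
    optimizer.step()

    # Monitor performance on held-out data with dropout disabled.
    model.eval()
    with torch.no_grad():
        val_loss = loss_fn(model(x_val), y_val).item()

    # Save a checkpoint whenever the validation loss improves.
    if val_loss < best_val:
        best_val = val_loss
        torch.save({"epoch": epoch, "model": model.state_dict()}, checkpoint_path)

# Recover the best weights seen during training.
restored = torch.load(checkpoint_path)
model.load_state_dict(restored["model"])
print(f"best validation loss {best_val:.3f} at epoch {restored['epoch']}")
```

Keeping only the best checkpoint is one choice among several; saving one checkpoint per epoch costs more disk space but makes it easier to compare alternative fine-tuning strategies afterwards.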

Fine-tuning pre-trained models for generative AI applications offers the following advantages:

- Time and resource savings: Leveraging pre-trained models eliminates the need to build models from scratch, saving substantial time and resources.

- Customization for specific domains: Fine-tuning allows tailoring models to industry-specific use cases, improving performance and accuracy, especially in niche applications requiring domain-specific expertise.

- Enhanced interpretability: Pre-trained models, having learned underlying data patterns, can become more interpretable and easier to understand after fine-tuning.

Fine-tuning pre-trained models is a dependable method for building high-quality generative AI applications. It lets developers craft tailored models for industry-specific needs by harnessing the knowledge embedded in existing models. This approach not only conserves time and resources but also improves the accuracy and robustness of the resulting models. It’s important to note that fine-tuning is not a universal remedy and requires thoughtful, careful handling.